I started this series of posts in: Digital Accountability and Transparency Act (DATA Act) [The Details], where I concluded the Data Act had the following characteristics:

- Secretary of the Treasury has one (1) year to design a common data format for unknown financial data in Federal agencies.

- Federal agencies have one (1) year to comply with the common data format from the Secretary of the Treasury.

- No penalties or bonuses for the Secretary of the Treasury.

- No penalties or bonuses for Federal agencies failing to comply.

- No funding for the Secretary of the Treasury to carry out the assigned duties.

- No funding for Federal agencies to carry out the assigned duties.

As written, the Digital Accountability and Transparency Act (DATA Act) will be DOA (Dead On Arrival) in the current or any future session of Congress.

There are three (3) main reasons why that is the case.

A Common Data Format

Let me ask a dumb question: Do you remember 9/11?

Of course you do. And the United States has been in a state of war on terrorism ever since.

I point that out because intelligence sharing (read common data format) was identified as a reason why the 9/11 attacks weren’t stopped and has been a high priority to solve since then.

Think about that: Reason why the attacks weren’t stopped and a high priority to correct.

This next September 11th will be the twelfth anniversary of those attacks.

Progress on intelligence sharing: Progress Made and Challenges Remaining in Sharing Terrorism-Related Information which I gloss in Read’em and Weep, along with numerous other GAO reports on intelligence sharing.

The good news is that we are less than five (5) years away from some unknown level of intelligence sharing.

The bad news is that puts us sixteen (16) years after 9/11 with some unknown level of intelligence sharing.

And that is for a subset of the entire Federal government.

A smaller set than will be addressed by the Secretary of the Treasury.

Common data format in a year? Really?

To say nothing of the likelihood of agencies changing the multitude of systems they have in place in a year.

No penalties or bonuses

You can think of this as the proverbial carrot and stick if you like.

What incentive do the Secretary of the Treasury and Federal agencies have to engage in this fool’s errand pursuing a common data format?

In case you have forgotten, both the Secretary of the Treasury and Federal agencies have obligations under their existing missions.

Missions which they are designed by legislation and habit to discharge before they turn to additional reporting duties.

And what happens if they discharge their primary mission but don’t do the reporting?

Oh, they get reported to Congress. And ranked in public.

As Ben Stein would say, “Wow.”

No Funding

To add insult to injury, there is no additional funding for either the Secretary of the Treasury or Federal agencies to engage in any of the activities specified by the Digital Accountability and Transparency Act (DATA Act).

As I noted above, the Secretary of the Treasury and Federal agencies already have full plates with their current missions.

Now they are to be asked to undertake unfamiliar tasks: creating a chimerical “common data format” and submitting reports based upon it.

Without any additional staff, training, or other resources.

Directives without resources to fulfill them are directives that are going to fail. (full stop)

Tentative Conclusion

If you are asking yourself, “Why would anyone advocate the Digital Accountability and Transparency Act (DATA Act)?,” five points for your house!

I don’t know of anyone who understands:

- the complexity of Federal data,

- the need for incentives,

- the need for resources to perform required tasks,

who thinks the Digital Accountability and Transparency Act (DATA Act) is viable.

Why advocate non-viable legislation?

Its non-viability makes it an attractive fundraising mechanism.

Advocates can email, fundraise, telethon, rant, etc., to their hearts’ content.

Advocating non-viable transparency lines an organization’s pocket at no risk of losing its rationale for existence.

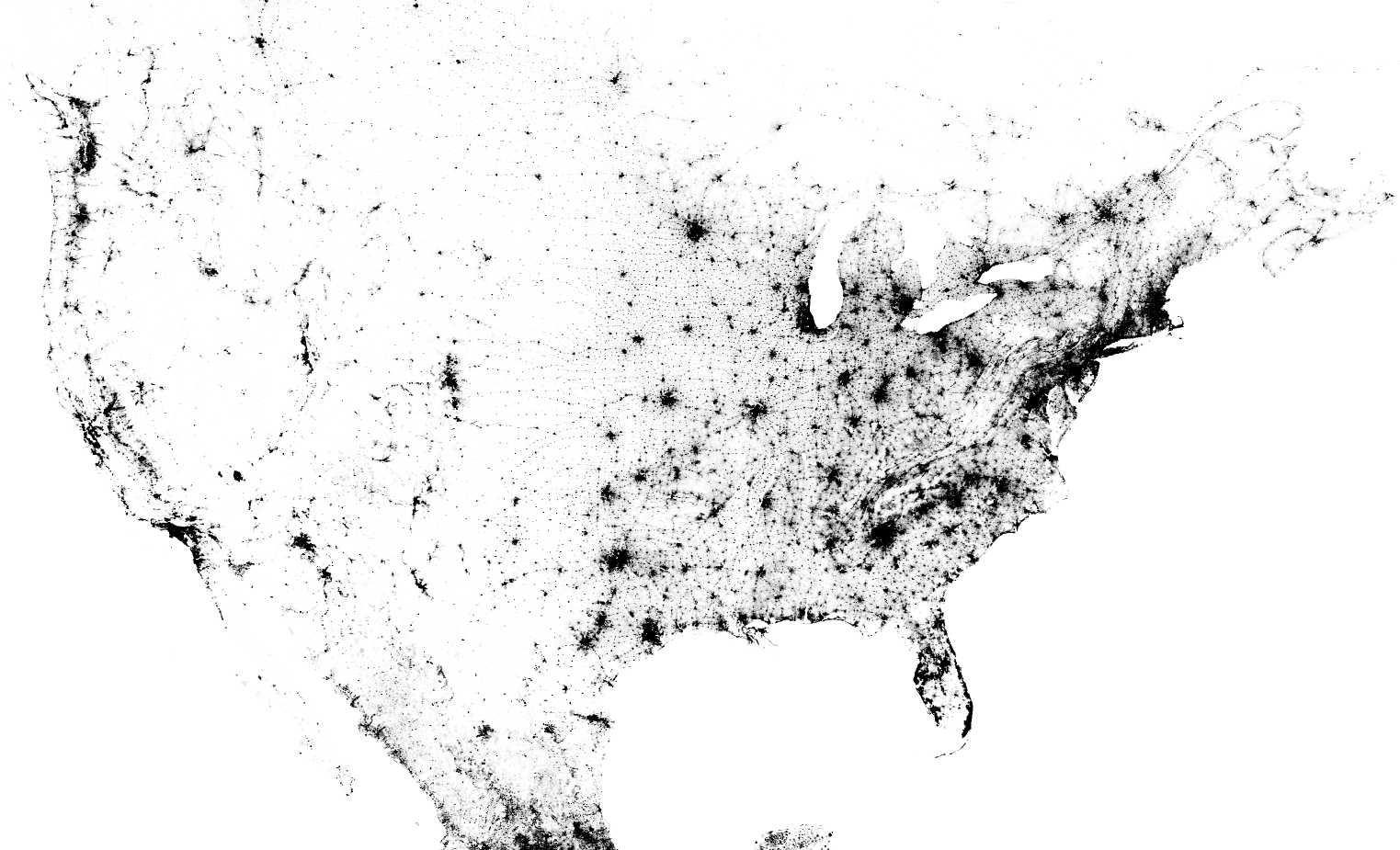

The third post in this series, suggesting a viable way forward, will appear tomorrow under: Transparency and the Digital Oil Drop.