Coffeehouse

From the about page:

Coffeehouse aggregates posts about data management from around the internet.

The idea for this site draws inspiration from other aggregators such as Ecobloggers and R-Bloggers.

Coffeehouse is a project of DataONE, the Data Observation Network for Earth.

Posts are lightly curated. That is, all posts are brought in, but if we see posts that aren't on topic, we take them down from this blog. They are not, of course, taken down from the original poster's site, only from this blog.

Recently added data blogs:

Archive and Data Management Training Center

We believe that the character and structure of the social science research environment determines attitudes to re-use.

We also believe a healthy research environment gives researchers incentives to confidently create re-usable data, and gives data archives and repositories incentives to commit to supporting data discovery and re-use through data enhancement and long-term preservation.

The purpose of our center is to ensure excellence in the creation, management, and long-term preservation of research data. We promote the adoption of standards in research data management and archiving to support data availability, re-use, and the repurposing of archived data.

Our desire is to see the European research area producing quality data with wide and multipurpose re-use value. By supporting multipurpose re-use, we want to help researchers, archives and repositories realize the intellectual value of public investment in academic research. (From the "about" page of the Archive and Data Management Training Center website, but representative of the blog as well.)

Data Ab Initio

My name is Kristin Briney and I am interested in all things relating to scientific research data.

I have been in love with research data since working on my PhD in Physical Chemistry, when I preferred modeling and manipulating my data to actually collecting it in the lab (or, heaven forbid, doing actual chemistry). This interest in research data led me to a Master’s degree in Information Studies where I focused on the management of digital data.

This blog is something I wish I had when I was a practicing scientist: a resource to help me manage my data and navigate the changing landscape of research dissemination.

Digital Library Blog (Stanford)

The latest news and milestones in the development of Stanford's digital library, including content, new services, and infrastructure development.

Dryad News and Views

Welcome to Dryad News and Views, a blog about news and events related to the Dryad digital repository. Subscribe, comment, contribute, and be sure to Publish Your Data!

Dryad is a curated general-purpose repository that makes the data underlying scientific publications discoverable, freely reusable, and citable. Any journal or publisher that wishes to encourage data archiving may refer authors to Dryad. Dryad welcomes data submissions related to any published or accepted peer-reviewed scientific and medical literature, particularly data for which no specialized repository exists.

Journals can support and facilitate their authors’ data archiving by implementing “submission integration,” by which the journal manuscript submission system interfaces with Dryad. In a nutshell: the journal sends automated notifications to Dryad of new manuscripts, which enables Dryad to create a provisional record for the article’s data, thereby streamlining the author’s data upload process. The published article includes a link to the data in Dryad, and Dryad links to the published article.

The Dryad documentation site provides complete information about Dryad and the submission integration process.

Dryad staff welcome all inquiries. Thank you.

<tamingdata/>

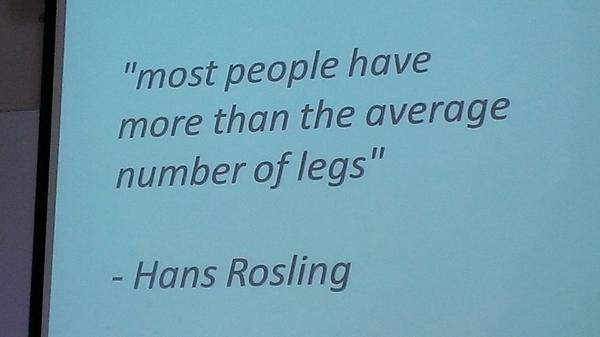

The data deluge refers to the increasingly large and complex data sets generated by researchers that must be managed by their creators with “industrial-scale data centres and cutting-edge networking technology” (Nature 455) in order to provide for use and re-use of the data.

The lack of standards and infrastructure to appropriately manage this (often taxpayer-funded) data requires data creators, data scientists, data managers, and data librarians to collaborate in order to create and acquire the technology required to provide for data use and re-use.

This blog is my way of sorting through the technology, management, research and development that have come together to successfully solve the data deluge. I will post and discuss both current and past R&D in this area. I welcome any comments.

To date, fourteen data blogs feed into Coffeehouse. Unlike some other data blog aggregators, Coffeehouse does not let ads overwhelm its content.

If you have a data blog, please consider adding it to Coffeehouse, and encourage other data bloggers to do the same.