Twitter open sourced a recommendation algorithm for massive datasets by Derrick Harris.

From the post:

Late last month, Twitter open sourced an algorithm that’s designed to ease the computational burden on systems trying to recommend content — contacts, articles, products, whatever — across seemingly endless sets of possibilities. Called DIMSUM, short for Dimension Independent Matrix Square using MapReduce (rolls off the tongue, no?), the algorithm trims the list of potential combinations to a reasonable number, so other recommendation algorithms can run in a reasonable amount of time.

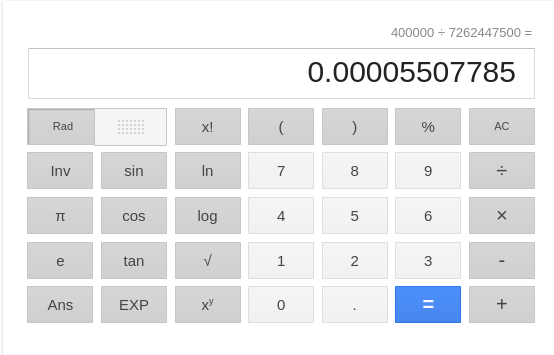

Reza Zadeh, the former Twitter data scientist and current Stanford consulting professor who helped create the algorithm, describes it in terms of the famous handshake problem. Two people in a room? One handshake; no problem. Ten people in a room? Forty-five handshakes; still doable. However, he explained, “The number of handshakes goes up quadratically … That makes the problem very difficult when x is a million.”

Twitter claims 271 million active users.
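To put that user count in handshake terms: the number of unordered pairs among n people is n(n-1)/2. The snippet below is only an illustration of that arithmetic (the function name `handshakes` is made up for this note, not taken from the post):

```python
def handshakes(n: int) -> int:
    """Number of unordered pairs among n people: n * (n - 1) / 2."""
    return n * (n - 1) // 2


# The two examples from the quote, plus Twitter's claimed user count.
for people in (2, 10, 271_000_000):
    print(f"{people:>11,} people -> {handshakes(people):,} handshakes")
```

At 271 million users that works out to roughly 3.7 × 10^16 candidate pairs, which is why comparing everyone with everyone is impractical.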

DIMSUM works primarily in two different areas: (1) matching promoted ads with the right users, and (2) suggesting similar people to follow after users follow someone. Running through all the possible combinations would take days even on a large cluster of machines, Zadeh said, but sampling the user base using DIMSUM takes significantly less time and significantly fewer machines.

…

The “similarity” of two or more people or bits of content is a variation on the merging rules of the TMDM.

In recommendation language, two or more topics are “similar” if they have:

- at least one equal string in their [subject identifiers] properties,

- at least one equal string in their [item identifiers] properties,

- at least one equal string in their [subject locators] properties,

- an equal string in the [subject identifiers] property of the one topic item and the [item identifiers] property of the other, or

- the same information item in their [reified] properties.

TMDM 5.3.5 Properties
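As a rough sketch, the rules listed above can be written as a pairwise test. The `Topic` class and its field names are hypothetical stand-ins for topic items, and modeling [reified] as an optional identifier string is an assumption made to keep the example short:

```python
from dataclasses import dataclass, field
from typing import Optional, Set


@dataclass
class Topic:
    """Hypothetical, minimal stand-in for a TMDM topic item."""
    subject_identifiers: Set[str] = field(default_factory=set)
    item_identifiers: Set[str] = field(default_factory=set)
    subject_locators: Set[str] = field(default_factory=set)
    reified: Optional[str] = None  # assumption: id of the reified item, if any


def tmdm_equal(a: Topic, b: Topic) -> bool:
    """True if any one of the five listed conditions holds."""
    return bool(
        a.subject_identifiers & b.subject_identifiers
        or a.item_identifiers & b.item_identifiers
        or a.subject_locators & b.subject_locators
        # subject identifier of one topic vs. item identifier of the other,
        # checked in both directions
        or a.subject_identifiers & b.item_identifiers
        or a.item_identifiers & b.subject_identifiers
        or (a.reified is not None and a.reified == b.reified)
    )


t1 = Topic(subject_identifiers={"http://example.org/twitter"})
t2 = Topic(item_identifiers={"http://example.org/twitter"})
t3 = Topic(subject_locators={"http://example.org/page"})
print(tmdm_equal(t1, t2), tmdm_equal(t1, t3))
```

Note that the answer is strictly yes or no: a single shared string is enough, and nothing short of that counts.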

The TMDM says “equal” and not “similar,” but the point is that you can arbitrarily decide how “similar” two or more topics must be in order to trigger merging.

That realization opens up the entire realm of “similarity” and “recommendation” algorithms and techniques for application to topic maps.
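For instance, one could replace “at least one equal string” with a score and a cutoff. The sketch below uses Jaccard similarity over sets of property strings. Jaccard is just one convenient measure chosen for illustration; it is not part of the TMDM or of DIMSUM, and the function names and the 0.5 default are assumptions:

```python
def jaccard(a: set, b: set) -> float:
    """Size of the intersection divided by size of the union."""
    if not a and not b:
        return 0.0  # convention chosen here: two empty sets are not similar
    return len(a & b) / len(a | b)


def should_merge(a: set, b: set, threshold: float = 0.5) -> bool:
    """Treat two topics as merge candidates once similarity reaches the threshold."""
    return jaccard(a, b) >= threshold


# Hypothetical property strings for two topics.
topic_a = {"twitter", "dimsum", "spark"}
topic_b = {"twitter", "dimsum", "mapreduce"}

print(jaccard(topic_a, topic_b))                       # 2 shared / 4 total
print(should_merge(topic_a, topic_b, threshold=0.5))
print(should_merge(topic_a, topic_b, threshold=0.6))
```

Raising or lowering the threshold is exactly the “arbitrary” decision: the same two topics merge at 0.5 and stay apart at 0.6.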

Which brings us back to the algorithm just open sourced by Twitter.

With DIMSUM, you don’t have to do a brute force topic by topic comparison for merging purposes. Some topics will not meet a merging “threshold” and so will not be considered by merging routines.

Of course, with the TMDM, where merging is either true or false, you may be stuck with brute force. Suggestions?

But if you have other similarity measures, you may be able to profit from DIMSUM.
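To make the sampling idea concrete, here is a small single-machine sketch loosely modeled on the published description of DIMSUM for cosine similarity between columns. It is not Twitter's code and leaves out the MapReduce plumbing. The function names, the dict-per-row representation, and the toy data are assumptions for illustration. Think of columns as the things being compared (topics, say) and rows as whatever they co-occur in:

```python
import math
import random
from collections import defaultdict


def column_norms(rows):
    """Euclidean norm of every column in a list of sparse rows (dicts)."""
    squares = defaultdict(float)
    for row in rows:
        for col, val in row.items():
            squares[col] += val * val
    return {col: math.sqrt(s) for col, s in squares.items()}


def sampled_cosine_similarities(rows, gamma, rng=None):
    """Estimate cosine similarity for column pairs by sampling co-occurrences.

    A pair (j, k) seen in a row is kept with probability
    min(1, gamma / (|c_j| * |c_k|)), so pairs of large-norm columns are
    sampled sparsely instead of being counted in full.
    """
    rng = rng or random.Random(0)
    norms = column_norms(rows)
    sums = defaultdict(float)
    for row in rows:
        cols = sorted(c for c, v in row.items() if v != 0)
        for i, j in enumerate(cols):
            for k in cols[i + 1:]:
                keep_prob = min(1.0, gamma / (norms[j] * norms[k]))
                if rng.random() < keep_prob:
                    sums[(j, k)] += row[j] * row[k]
    sims = {}
    for (j, k), total in sums.items():
        denom = norms[j] * norms[k]
        # Rescale so the estimate is unbiased: exact when nothing was dropped,
        # divided by gamma when the pair was down-sampled.
        sims[(j, k)] = total / (denom if gamma / denom > 1.0 else gamma)
    return sims


# Toy data: three rows, three columns "a", "b", "c".
rows = [{"a": 1, "b": 1}, {"a": 1, "c": 1}, {"a": 1, "b": 1, "c": 1}]
exact = sampled_cosine_similarities(rows, gamma=1e9)  # huge gamma: no sampling
print(exact)
```

With a very large `gamma` every pair is kept and the result is the exact cosine similarity. Lowering `gamma` trades accuracy on weakly similar pairs for less work, which is the part that matters if merging only cares about pairs above some threshold.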

BTW, I would not follow #dimsum on Twitter because it is apparently a type of dumpling. 😉

Update: All-pairs similarity via DIMSUM. DIMSUM has been implemented in Spark!