Alicia Shepard, in “A few weeks after the Panama Papers’ release, The New York Times and Washington Post start digging in,” caught me off guard with:

Many newspapers aren’t comfortable with ICIJ’s “radical sharing” concept.

Suspecting Alicia was using “sharing” to mean something beyond my experience, I had to read her post!

Alicia explains the absence of the New York Times and the Washington Post from the initial reporting on the Panama Papers, saying:

Why weren’t the Times or the Post included originally? Walker said that, in general, many newspapers are not comfortable with ICIJ’s “radical sharing” concept, in which all journalists who agree to collaborate must promise to share their reporting, protect confidentiality, not share the data, and publish when ICIJ gives the go-ahead.

I see. “Radical sharing” means collaborating on research (a good thing), protecting confidentiality (another good thing), then being bound not to share the data, which restricts it to ICIJ-approved participants (a bad thing), and publishing only when the ICIJ allows (another bad thing).

Not what I would consider “radical” sharing, but I can see why newspapers, like many traditional publishers, fear the sharing of research, even though sharing research in other areas has been shown to lift all boats.

The lizard brain reflex against sharing still dominates in many areas of human endeavor. News reporting in particular.

Alicia also quotes Marina Walker saying:

“We are excited to be working with The New York Times and The Washington Post, two of the world’s best newspapers,” said Marina Walker, deputy director of the Washington, D.C.–based ICIJ. “Both of them signed up at more or less the same time, two or three weeks ago. Both teams were recently trained by ICIJ researchers and reporters on how to use the data and we continue to assist them as needed, like we do with other partners. So far, so good.”

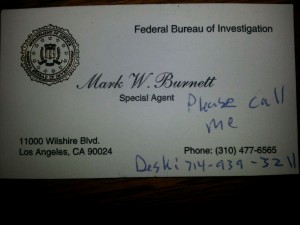

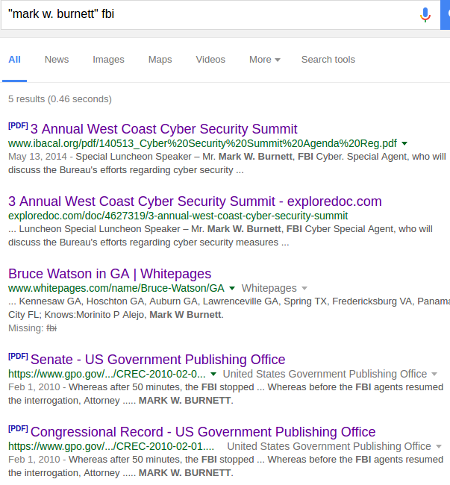

That quote is the “smoking gun” for my suggestion, in “Panama Papers – Shake That Money Maker,” that the ICIJ is hoarding the Panama Papers for its own power and profit.

The ICIJ, realizing that training and assistance are never free, wants control over the data in order to dictate who sees it and when they can publish using it.

Combine that with the largest data leak to date, and the self-serving nature of the claim that the data might reveal the leaker becomes self-evident.

Hoarding data for profit is, as I have said, understandable and to some degree even reasonable.

But let’s have that conversation, and not one based on specious claims about the leaker’s or the public’s interest.

PS: Getting to dictate to the Washington Post and the New York Times must be heady stuff.

PPS: Any Panama Papers secondary leakers yet?