Executive Summary:

If you reported Vault 7: CIA Hacking Tools Revealed as containing:

8,761 documents and files from an isolated, high-security network situated inside the CIA’s Center for Cyber Intelligence in Langley, Virgina…. (Vault 7: CIA Hacking Tools Revealed)

you failed to check your facts.

I detail my process below but in terms of numbers:

- Of 7809 HTML files, 6675 are duplicates or Wikileaks artifacts

- Of 357 PDF files, 134 are Wikileaks artifacts (for materials not released). Of the remaining 223 PDF files, 109 are public information, the GNU Make Manual for instance. Out of the 357 PDF files, Wikileaks has delivered 114 that are arguably from the CIA, and some of those are doubtful. (Part 2, forthcoming)

Wikileaks haters will find little solace here. My criticisms of Wikileaks are for padding the leak and not enabling effective use of the leak. Padding the leak is obvious from the inclusion of numerous duplicate and irrelevant documents. Effective use of the leak is impaired by the padding but also by teases of what could have been released but wasn’t.

Getting Started

To start on common ground, fire up a torrent client, obtain and decompress: Wikileaks-Year-Zero-2017-v1.7z.torrent.

Decompression requires this password: SplinterItIntoAThousandPiecesAndScatterItIntoTheWinds

The root directory is year0.

When I run a recursive ls from above that directory:

ls -R year0 | wc -l

My system reports: 8820

Change to the year0 directory and ls reveals:

bootstrap/ css/ highlighter/ IMG/ localhost:6081@ static/ vault7/

Checking the files in vault7:

ls -R vault7/ | wc -l

returns: 8755

Change to the vault7 directory and ls shows:

cms/ files/ index.html logo.png

The directory files/ has only one file, org-chart.png. It is an organization chart of the CIA, but with sub-departments listed as acronyms and “???.” Did the author of the chart not know the names of those departments? I point that out as the first of many file anomalies.

Some 7809 HTML files are found under cms/.

The cms/ directory has a sub-directory files, plus main.css and 7809 HTML files (including the index.html file).
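Keep in mind that ls -R | wc -l counts every line of output, including directory names, directory headers and blank separator lines, so it overstates the number of files. A minimal sketch for counting files by extension instead, assuming Python 3 and the year0/vault7/cms path used in this post; the function name count_by_extension is my own invention for illustration:

```python
import os
from collections import Counter


def count_by_extension(root):
    """Count non-directory entries under root, keyed by lowercased extension."""
    counts = Counter()
    for _dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            ext = os.path.splitext(name)[1].lower()
            counts[ext] += 1
    return counts


if __name__ == "__main__":
    # Path as used in this post; adjust to wherever you unpacked the archive.
    counts = count_by_extension(os.path.join("year0", "vault7", "cms"))
    for ext, n in sorted(counts.items()):
        print(ext or "(no extension)", n)
```

The walk is recursive, so the .html total covers cms/ and anything below it; compare it against the 7809 reported above rather than assuming it matches.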

Duplicated HTML Files

I discovered duplication of the HTML files quite by accident. I had prepared the files with Tidy for parsing with Saxon and compressed a set of those files for uploading.

The 7808 files I compressed started at 296.7 MB.

The compressed size, using 7z, was approximately 3.5 MB.

That’s almost two orders of magnitude of compression. 7z is good, but it’s not quite that good. 😉

Checking my file compression numbers

You don’t have to take my word for the file compression experience. If you select all the page_*, space_* and user_* HTML files in a file browser, it should report a total size of 296.7 MB.

Create a sub-directory under year0/vault7/cms/, say mkdir htmlfiles, and then:

cp *.html htmlfiles

Then: cd htmlfiles

and,

7z a compressedhtml.7z *.html

Run: ls -l compressedhtml.7z

Result: 3488727 Mar 16 16:31 compressedhtml.7z

Tom Harris, in How File Compression Works, explains that:

…

Most types of computer files are fairly redundant — they have the same information listed over and over again. File-compression programs simply get rid of the redundancy. Instead of listing a piece of information over and over again, a file-compression program lists that information once and then refers back to it whenever it appears in the original program.

…

If you don’t agree the HTML files are highly repetitive, check the next section where one source of duplication is demonstrated.
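By the numbers above, 296.7 MB down to 3,488,727 bytes is a ratio of roughly 85 to 1. To see why repeated versions of a page can produce ratios like that, here is a small sketch using Python's lzma module (LZMA is, as far as I know, the algorithm family 7z uses by default). The data is synthetic random bytes, not the leaked files, and compression_ratio is a hypothetical helper name:

```python
import lzma
import os


def compression_ratio(data: bytes) -> float:
    """Return original size divided by LZMA-compressed size."""
    return len(data) / len(lzma.compress(data))


# Synthetic stand-in for one page: 10 KB of random bytes.
page = os.urandom(10_000)

# 50 unrelated pages: nothing repeats, so there is no redundancy to remove.
distinct = b"".join(os.urandom(10_000) for _ in range(50))

# 50 "versions" of the same page, each differing only by a short suffix.
versions = b"".join(page + str(i).encode() for i in range(50))

print(f"distinct pages:    {compression_ratio(distinct):.2f}x")
print(f"repeated versions: {compression_ratio(versions):.2f}x")
```

The unrelated pages barely compress at all, while the repeated versions shrink to a small fraction of their size. A very high ratio on real files is therefore a hint of duplication, not proof of it.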

Demonstrating Duplication of HTML files

Let’s start with the same file as we look for a source of duplication. Load Cinnamon Cisco881 Testing at Wikileaks into your browser.

Scroll to near the bottom of the file where you see:

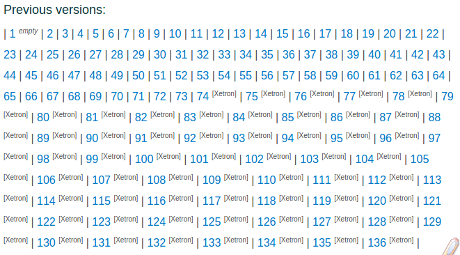

Yes! There are 136 prior versions of this alleged CIA file in the directory.

Cinnamon Cisco881 Testing has the most prior versions but all of them have prior versions.

Are we now in agreement that duplicated versions of the HTML pages exist in the year0/vault7/cms/ directory?

Good!

Now we need to count how many duplicated files there are in year0/vault7/cms/.

Counting Prior Versions of the HTML Files

You may or may not have noticed but every reference to a prior version takes the form:

<a href="filename.html">integer</a>

That’s going to be an important fact but let’s clean up the HTML so we can process it with XQuery/Saxon.

Preparing for XQuery

Before we start crunching the HTML files, let’s clean them up with Tidy.

Here’s my Tidy config file:

output-xml: yes

quote-nbsp: no

show-warnings: no

show-info: no

quiet: yes

write-back: yes

In htmlfiles I run:

tidy -config tidy.config *.html

Tidy reports two errors:

line 887 column 1 - Error:

line 887 column 15 - Error:

Grepping for “declarations”:

grep "declarations" *.html

Returns:

page_26345506.html:<declarations><string name="½ö"></string></declarations><p>›<br>

The string element is present as well so we open up the file and repair it with XML comments:

<!-- <declarations><string name="½ö"></string></declarations><p>›<br> -->

<!-- prior line commented out to avoid Tidy error, pld 14 March 2017-->

Rerun Tidy:

tidy -config tidy.config *.html

Now Tidy returns no errors.

XQuery Finds Prior Versions

Our files are ready to be queried but 7809 is a lot of files.

There are a number of solutions but a simple one is to create an XML collection of the documents and run our XQuery statements across the files as a set.

Here’s how I created a collection file for these files:

I did an ls in the directory and piped that to collection.xml. Opening the file I deleted index.html, started each entry with <doc href=" and ended each one with "/>, inserted <collection> before the first entry and </collection> after the last entry and then saved the file.

Your version should look something like:

<collection>
  <doc href="page_10158081.html"/>
  <doc href="page_10158088.html"/>
  <doc href="page_10452995.html"/>
  ...
  <doc href="user_7995631.html"/>
  <doc href="user_8650754.html"/>
  <doc href="user_9535837.html"/>
</collection>
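If hand-editing a listing of several thousand entries doesn't appeal, the same file can be generated. A minimal sketch, assuming Python 3 and that every .html file in the directory except index.html belongs in the collection; build_collection is a name I made up for illustration, and this is not how I built my own copy:

```python
import os
from xml.sax.saxutils import quoteattr


def build_collection(directory, skip=("index.html",)):
    """Return a <collection> catalog listing every .html file in directory."""
    names = sorted(
        n for n in os.listdir(directory)
        if n.endswith(".html") and n not in skip
    )
    lines = ["<collection>"]
    # quoteattr adds the surrounding quotes and escapes any XML-special characters.
    lines += [f"  <doc href={quoteattr(n)}/>" for n in names]
    lines.append("</collection>")
    return "\n".join(lines) + "\n"
```

From inside htmlfiles, writing the result of build_collection(".") to collection.xml should give a file of the shape shown above.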

The prior versions in Cinnamon Cisco881 Testing from Wikileaks, have this appearance in HTML source:

<h3>Previous versions:</h3>

<p>| <a href="page_17760540.html">1</a> <span class="pg-tag"><i>empty</i></span>

| <a href="page_17760578.html">2</a> <span class="pg-tag"></span>…..

| <a href="page_23134323.html">135</a> <span class="pg-tag">[Xetron]</span>

| <a href="page_23134377.html">136</a> <span class="pg-tag">[Xetron]</span>

|</p>

</div>

You will need to spend some time with the files (I have obviously) to satisfy yourself that <a> elements that contain only numbers are exclusively used for prior references. If you come across any counter-examples, I would be very interested to hear about them.

To get a file count on all the prior references, I used:

let $count := count(collection('collection.xml')//a[matches(.,'^\d+$')])

return $count

Run that script to find: 6514 previous editions of the base files
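If you don't have Saxon handy, a rough cross-check is possible with Python's standard library alone. This is a sketch under the same assumption as the XQuery (an <a> element whose text is digits only is a prior-version link), not the method I used; DigitLinkCounter, count_digit_links and count_in_directory are hypothetical names:

```python
import glob
import os
import re
from html.parser import HTMLParser

DIGITS_ONLY = re.compile(r"\d+")


class DigitLinkCounter(HTMLParser):
    """Count <a> elements whose text content consists only of digits."""

    def __init__(self):
        super().__init__()
        self.count = 0
        self._text = None  # list of text chunks while inside an <a>, else None

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._text = []

    def handle_data(self, data):
        if self._text is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._text is not None:
            # fullmatch requires the whole link text to be digits.
            if DIGITS_ONLY.fullmatch("".join(self._text)):
                self.count += 1
            self._text = None


def count_digit_links(html):
    parser = DigitLinkCounter()
    parser.feed(html)
    parser.close()
    return parser.count


def count_in_directory(directory="."):
    """Sum digit-only links over every .html file except index.html."""
    total = 0
    for path in glob.glob(os.path.join(directory, "*.html")):
        if os.path.basename(path) == "index.html":
            continue  # index.html was left out of collection.xml too
        with open(path, encoding="utf-8", errors="replace") as fh:
            total += count_digit_links(fh.read())
    return total
```

Calling count_in_directory() from inside htmlfiles should land on the same figure as the XQuery if the two approaches treat whitespace and nested markup alike. I haven't verified that they do, so treat any difference as a prompt to look closer.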

Unpacking the XQuery

Rest assured that’s not how I wrote the first XQuery on this data set! 😉

Without exploring all the by-ways and alleys I traversed, I will unpack that query.

First, the goal of the query is to identify every <a> element that contains only digits. Recall that links to previous versions have only digits in their <a> elements.

A shout out to Jonathan Robie, Editor of XQuery, for reminding me that regular expressions match substrings unless they have beginning and ending line anchors. Here:

'^\d+$'

The \d matches only digits, the + enables matching 1 or more digits, and the beginning ^ and ending $ eliminate any <a> elements that might start with one or more digits, but also contain text. Like links to files, etc.
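The effect of the anchors is easy to see on a few sample strings. A small illustration using Python's re module in place of XQuery's matches function; the link texts are made up for the example:

```python
import re

# Made-up link texts: one prior-version number and three that are not.
link_texts = ["136", "2 files attached", "v2", ""]

# Unanchored: \d+ succeeds if digits appear anywhere in the string.
unanchored = [t for t in link_texts if re.search(r"\d+", t)]

# Anchored: ^ and $ force the digits to span the whole string.
# (Python's $ also tolerates one trailing newline; none of these have one.)
anchored = [t for t in link_texts if re.search(r"^\d+$", t)]

print(unanchored)  # ['136', '2 files attached', 'v2']
print(anchored)    # ['136']
```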

Expanding out a bit more, in [matches(.,'^\d+$')] the [ ] enclose a predicate that consists of the matches function, which takes two arguments. The . here represents the content of an <a> element, followed by a comma as a separator and then the regex that provides the pattern to match against.

Although talked about as a “code smell,” the //a in //a[matches(.,'^\d+$')] enables us to pick up the <a> elements wherever they are located. We did have to repair these HTML files and I don’t want to spend time debugging ephemeral HTML.

Almost there! The collection file, along with the collection function, collection('collection.xml') enables us to apply the XQuery to all the files listed in the collection file.

Finally, we surround all of the foregoing with the count function: count(collection('collection.xml')//a[matches(.,'^\d+$')]) and declare a variable to capture the result of the count function: let $count :=

So far so good? I know, tedious for XQuery jocks but not all news reporters are XQuery jocks, at least not yet!

Then we produce the results: return $count.

But 6514 files aren’t 6675 files, you said 6675 files

Yes, you’re right! Thanks for paying attention!

I said at the top, 6675 are duplicates or Wikileaks artifacts.

Where are the others?

If you look at User #71477, which has the file name, user_40828931.html, you will find it’s not a CIA document but part of Wikileaks administration for these documents. There are 90 such pages.

If you look at Marble Framework, which has the file name, space_15204359.html, you find it’s not a CIA document but a form of indexing created by Wikileaks. There are 70 such pages.

Don’t forget the index.html page.

When added together, 6514 (duplicates), 90 (user pages), 70 (space pages), index.html, I get 6675 duplicates or Wikileaks artifacts.

What’s your total?
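For anyone checking the sums, here is the tally as a few lines of Python. Every figure is copied from this post; nothing is recomputed from the leak itself:

```python
# Figures reported in this post.
html_total = 7809        # HTML files under cms/, including index.html
prior_versions = 6514    # digit-only <a> links counted by the XQuery
user_pages = 90          # user_*.html, Wikileaks administration pages
space_pages = 70         # space_*.html, Wikileaks indexing pages
index_page = 1           # index.html

artifacts = prior_versions + user_pages + space_pages + index_page
remaining = html_total - artifacts

print(artifacts)  # 6675
print(remaining)  # 1134
```

By this count, 1134 HTML pages are neither prior versions nor Wikileaks artifacts.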

Tomorrow:

In Fact Checking Wikileaks’ Vault 7: CIA Hacking Tools Revealed (Part 2), I look under year0/vault7/cms/files to discover:

- Arguably CIA files (maybe) – 114

- Public documents – 109

- Wikileaks artifacts – 134

I say “Arguably CIA” because there are file artifacts and anomalies that warrant your attention in evaluating those files.