The New York Times, sensing a possible defeat of its neo-liberal agenda on November 8, 2016, has loosed the dogs of war on social media in general and Wikileaks in particular.

Consider the sleight of hand in Farhad Manjoo’s How the Internet Is Loosening Our Grip on the Truth, which argues on one hand,

…

You’re Not Rational

The root of the problem with online news is something that initially sounds great: We have a lot more media to choose from.

In the last 20 years, the internet has overrun your morning paper and evening newscast with a smorgasbord of information sources, from well-funded online magazines to muckraking fact-checkers to the three guys in your country club whose Facebook group claims proof that Hillary Clinton and Donald J. Trump are really the same person.

A wider variety of news sources was supposed to be the bulwark of a rational age — “the marketplace of ideas,” the boosters called it.

But that’s not how any of this works. Psychologists and other social scientists have repeatedly shown that when confronted with diverse information choices, people rarely act like rational, civic-minded automatons. Instead, we are roiled by preconceptions and biases, and we usually do what feels easiest — we gorge on information that confirms our ideas, and we shun what does not.

This dynamic becomes especially problematic in a news landscape of near-infinite choice. Whether navigating Facebook, Google or The New York Times’s smartphone app, you are given ultimate control — if you see something you don’t like, you can easily tap away to something more pleasing. Then we all share what we found with our like-minded social networks, creating closed-off, shoulder-patting circles online.

…

This gets to the deeper problem: We all tend to filter documentary evidence through our own biases. Researchers have shown that two people with differing points of view can look at the same picture, video or document and come away with strikingly different ideas about what it shows.

…

You caught Manjoo's appeal to authority, "researchers have shown," and so on.

But did you notice he never shows his other hand?

If the public is so bat-shit crazy that it takes all social media content as equally trustworthy, what are we to do?

Well, that is the question, isn't it?

In his conclusion, Manjoo invokes "dozens of news outlets" that are tirelessly but hopelessly fact-checking on our behalf.

The strong implication is that without the help of "news outlets," you are a bundle of preconceptions and biases doing what feels easiest.

“News outlets,” on the other hand, are free from those limitations.

You bet.

If you thought Manjoo was bad, enjoy seething through Zeynep Tufekci's claims that Wikileaks is an opponent of privacy, a sponsor of censorship and an opponent of democracy, all in a little over 1,000 words (1,069, to be exact): Wikileaks Isn't Whistleblowing.

It's a breathtaking piece of half-truths.

For example, playing for your sympathy, Tufekci invokes dissidents' need for privacy, even to the point of summoning the ghost of the former Soviet Union.

Tufekci overlooks, and hopes you will too, the fact that these emails weren't from dissidents but from people who traded in and on the whims and caprices at the pinnacles of American power.

Perhaps realizing that is too transparent a ploy, she recounts other data dumps by Wikileaks to which she objects. As lawyers say, if the facts are against you, pound on the table.

In an echo of Manjoo, did you know you are too dumb to distinguish critical information from trivial?

Tufekci writes:

…

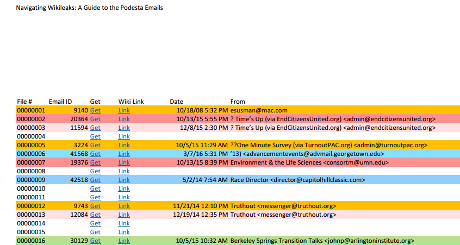

These hacks also function as a form of censorship. Once, censorship worked by blocking crucial pieces of information. In this era of information overload, censorship works by drowning us in too much undifferentiated information, crippling our ability to focus. These dumps, combined with the news media’s obsession with campaign trivia and gossip, have resulted in whistle-drowning, rather than whistle-blowing: In a sea of so many whistles blowing so loud, we cannot hear a single one.

…

I don’t think you are that dumb.

Do you?

But who will save us? You can guess Tufekci’s answer, but here it is in full:

…

Journalism ethics have to transition from the time of information scarcity to the current realities of information glut and privacy invasion. For example, obsessively reporting on internal campaign discussions about strategy from the (long ago) primary, in the last month of a general election against a different opponent, is not responsible journalism. Out-of-context emails from WikiLeaks have fueled viral misinformation on social media. Journalists should focus on the few important revelations, but also help debunk false misinformation that is proliferating on social media.

…

If you weren’t frightened into agreement by the end of her parade of horrors:

…

We can’t shrug off these dangers just because these hackers have, so far, largely made relatively powerful people and groups their targets. Their true target is the health of our democracy.

So now Wikileaks is gunning for democracy?

You bet. 😉

The journalists of my youth (think Vietnam, Watergate) were aggressive critics of government and the powerful. The Panama Papers project is evidence that this level of journalism still exists.

Instead of whining about releases by Wikileaks and others, journalists* need to step up and provide the context they see as lacking.

It would sure beat the hell out of repeating news releases from military commanders, “justice” department mouthpieces, and official but “unofficial” leaks from the American intelligence community.

* Like any generalization, this is grossly unfair to the many journalists who work on behalf of the public every day but lack the megaphone of the government lapdog New York Times. To those journalists, and only to them, I apologize in advance for any offense given. The rest of you, take such offense as is appropriate.