Tony Romm has a highly amusing account of how internet censors, Google, Facebook and Twitter, despite their own censorship efforts, object to social media screening on I-94 (think international arrival and departure) forms.

Tony writes in “Tech slams Homeland Security on social media screening”:

Internet giants including Google, Facebook and Twitter slammed the Obama administration on Monday for a proposal that would seek to weed out security threats by asking foreign visitors about their social media accounts.

The Department of Homeland Security for months has weighed whether to prompt foreign travelers arriving on visa waivers to disclose the social media websites they use — and their usernames for those accounts — as it seeks new ways to spot potential terrorist sympathizers. The government unveiled its draft plan this summer amid widespread criticism that authorities aren’t doing enough to monitor suspicious individuals for signs of radicalization, including the married couple who killed 14 people in December’s mass shooting in San Bernardino, Calif.

But leading tech companies said Monday that the proposal could “have a chilling effect on use of social media networks, online sharing and, ultimately, free speech online.”

….

Google, Facebook and Twitter casually censor hundreds of thousands of users every year, so their sudden concern for free speech is puzzling.

Until you catch the line:

have a chilling effect on use of social media networks, online sharing

Translation: chilling effect on market share of social media, diminished advertising revenues and online sales.

The reaction of Google, Facebook and Twitter reminds me of the elderly woman in church who would shout “Amen!” when the preacher talked about the dangers of alcohol, “Amen!” when he spoke against smoking, and “Amen!” when he spoke of the shame of gambling, but was curiously silent when the preacher said that dipping snuff was also sinful.

After the service, as the parishioners left the church, the preacher stopped the woman to ask about her change in demeanor. The woman said, “Well, but you went from preaching to meddling.”

😉

Speaking against terrorism and silencing users by the hundred thousand pose no threat to the revenue streams of Google, Facebook and Twitter. Both are easy enough, and the companies benefit from whatever credibility that buys with governments.

But with disclosure of social media use, which could have some adverse impact on revenue, the government has gone from preaching to meddling.

The revenue stream impacts imagined by Google, Facebook and Twitter are just that, imagined. The proposal’s actual impact is unknown. But fear of an adverse impact is so great that all three have swung into frantic action.

That’s a good measure of their commitment to free speech versus their revenue streams.

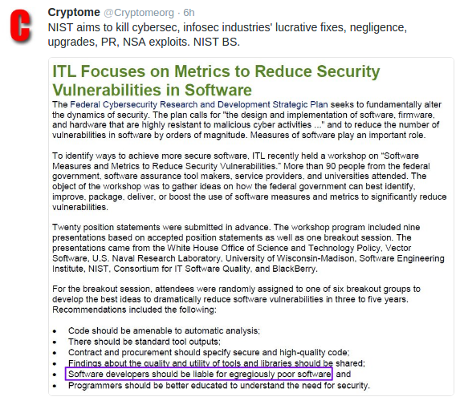

Having said all that, the Agency Information Collection Activities: Arrival and Departure Record (Forms I-94 and I-94W) and Electronic System for Travel Authorization is about as lame and ineffectual an anti-terrorist proposal as I have seen since 9/11.

You can see the comments on the I-94 farce, which I started to collect but then didn’t.

I shouldn’t say this for free but here’s one insight into “radicalization”:

Use of social media to exchange “radical” messages is a symptom of “radicalization,” not its cause.

You can convince yourself of that fact.

Despite expensive efforts to stamp out child pornography (radical messages), sexual abuse of children (radicalization) continues. The consumption of child pornography doesn’t cause sexual abuse of children, rather it is consumed by sexual abusers of children. The market is driving the production of the pornography. No market, no pornography.

So why the focus on child pornography?

It’s visible (like social media), it’s easy to find (like tweets), it’s abhorrent (ditto for beheadings), and cheap (unlike uncovering real sexual abuse of children and/or actual terrorist activity).

The same factors explain the misguided and wholly ineffectual focus on terrorism and social media.