The DoJ is trying to force Apple to comply with FBI by Nicole Lee.

I mention this because Nicole includes a link to: Case 5:16-cm-00010-SP Document 1 Filed 02/19/16 Page 1 of 35 Page ID #:1, which is the GOVERNMENT’S MOTION TO COMPEL APPLE INC. TO COMPLY WITH THIS COURT’S FEBRUARY 16, 2016 ORDER COMPELLING ASSISTANCE IN SEARCH; EXHIBIT.

Whatever the Justice Department wants to contend to the contrary, a hearing date of March 22, 2016 on this motion is ample evidence that the government has no “urgent need” for information, if any, on the cell phone in question. The government’s desire to waste more hours and resources on dead suspects is quixotic at best.

Now that Baby Blue’s Manual of Legal Citation (Baby Blue’s) is online and legal citations are no longer captives of the Bluebook® gang, tell me again how Baby Blue’s has increased public access to the law?

This is, after all, a very important public issue and the public should be able to avail itself of the primary resources.

You will find Baby Blue’s doesn’t help much in that regard.

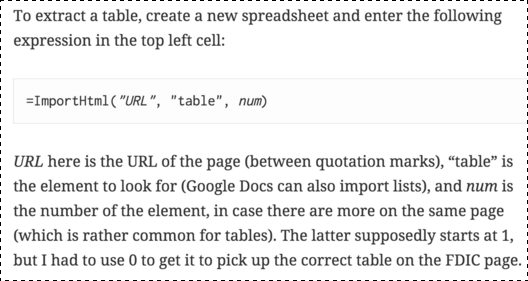

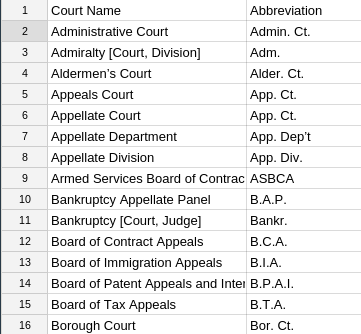

Contrast Baby Blue’s citation style advice with adding hyperlinks to the authorities cited in the Department of Justice’s “memorandum of points and authorities:”

Federal Cases

Central Bank of Denver v. First Interstate Bank of Denver, 551 U.S. 164 (1994).

General Construction Company v. Castro, 401 F.3d 963 (9th Cir. 2005)

In re Application of the United States for an Order Directing a Provider of Communication Services to Provide Technical Assistance to the DEA, 2015 WL 5233551, at *4-5 (D.P.R. Aug. 27, 2015)

In re Application of the United States for an Order Authorizing In-Progress Trace of Wire Commc’ns over Tel. Facilities (Mountain Bell), 616 F.2d 1122 (9th Cir. 1980)

In re Application of the United States for an Order Directing X to Provide Access to Videotapes (Access to Videotapes), 2003 WL 22053105, at *3 (D. Md. Aug. 22, 2003) (unpublished)

In re Order Requiring [XXX], Inc., to Assist in the Execution of a Search Warrant Issued by This Court by Unlocking a Cellphone (In re XXX) 2014 WL 5510865, at #2 (S.D.N.Y. Oct. 31, 2014)

Konop v. Hawaiian Airlines, Inc., 302 F.3d 868 (9th Cir. 2002)

Pennsylvania Bureau of Correction v. United States Marshals Service, 474 U.S. 34 (1985)

Plum Creek Lumber Co. v. Hutton, 608 F.2d 1283 (9th Cir. 1979)

Riley v. California, 134 S. Ct. 2473 (2014) [For some unknown reason, local rules must allow varying citation styles for U.S. Supreme Court decisions.]

United States v. Catoggio, 698 F.3d 64 (2nd Cir. 2012)

United States v. Craft, 535 U.S. 274 (2002)

United States v. Fricosu, 841 F.Supp.2d 1232 (D. Co. 2012)

United States v. Hall, 583 F. Supp. 717 (E.D. Va. 1984)

United States v. Li, 55 F.3d 325, 329 (7th Cir. 1995)

United States v. Navarro, No. 13-CR-5525, ECF No. 39 (W.D. Wa. Nov. 13, 2013)

United States v. New York Telephone Co., 434 U.S. 159 (1977)

Federal Statutes

18 U.S.C. 2510

18 U.S.C. 3103

28 U.S.C. 1651

47 U.S.C. 1001

47 U.S.C. 1002

First, I didn’t format a one of these citations. I copied them “as is” into a search engine so Baby Blue’s played no role in those searches.

Second, I added hyperlinks to a variety of sources for both the case law and statutes to make the point that one citation can resolve to a multitude of places.

Some places are commercial and have extra features while others are non-commercial and may have fewer features.

If instead of individual hyperlinks, I had a nexus for each case, perhaps using its citation as its public name, then I could attach pointers to a multitude of resources that all offer the same case or statute.

If you have Westlaw, LexisNexis or some other commercial vendor, you could choose to find the citation there. If you prefer non-commercial access to the same material, you could choose one of those access methods.

That nexus is what we call a topic in topic maps (“proxy” in the TMRM) and it would save every user, commercial or non-commercial, the sifting of search results that I performed this afternoon.
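To make the nexus idea concrete, here is a minimal sketch in Python. It is not a topic map engine and it is not the TMRM; the class names, the provider labels and the example.com / example.org URLs are all hypothetical placeholders I made up for illustration. It only shows one citation acting as the public name for a topic that carries pointers to several resources, from which a reader picks the ones available to them.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass(frozen=True)
class Occurrence:
    """One place where the cited material can be read."""
    provider: str     # hypothetical provider label
    url: str          # placeholder URL, not a real link
    commercial: bool  # True if access requires a paid service


@dataclass
class CitationTopic:
    """A nexus (topic/proxy) for a single case or statute."""
    public_name: str  # the citation exactly as it appears in the brief
    formats: dict[str, str] = field(default_factory=dict)  # style -> pre-formatted citation
    occurrences: list[Occurrence] = field(default_factory=list)

    def add(self, provider: str, url: str, commercial: bool) -> None:
        """Attach one more pointer to the same case or statute."""
        self.occurrences.append(Occurrence(provider, url, commercial))

    def resolve(self, commercial_ok: bool = True) -> list[Occurrence]:
        """Return the pointers this reader can use."""
        return [o for o in self.occurrences if commercial_ok or not o.commercial]


# One topic, many resources (all URLs below are made-up placeholders).
riley = CitationTopic("Riley v. California, 134 S. Ct. 2473 (2014)")
riley.add("commercial-vendor", "https://vendor.example.com/riley", commercial=True)
riley.add("free-archive", "https://archive.example.org/riley", commercial=False)
riley.formats["as-filed"] = riley.public_name

free_only = [o.url for o in riley.resolve(commercial_ok=False)]
print(free_only)
```

A reader with a commercial subscription would call `resolve()` and see both pointers; a reader without one would pass `commercial_ok=False` and see only the free source. The `formats` dictionary is where pre-formatted citations in different styles could hang off the same topic.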

The hyperlinks I used above make some of the law more accessible but not as accessible as it could be.

Creating a nexus/topic/proxy for each of these citations would enable users to pick pre-formatted citations (good-bye to formatting manuals for most of us) and the law material most accessible to them.

That sounds like greater public access to the law to me.

You?

Read the government’s “Memorandum of Points and Authorities” with a great deal of care.

For example:

The government is also aware of multiple other unpublished orders in this district and across the country compelling Apple to assist in the execution of a search warrant by accessing the data on devices running earlier versions of iOS, orders with which Apple complied.5

Be careful! Footnote 5 refers to a proceeding in the Eastern District of New York where the court sua sponte raised the issue of its authority under the All Writs Act. Footnote 5 recites no sources or evidence for the prosecutor’s claim of “…multiple other unpublished orders in this district and across the country….” None.

My impression is that the government’s argument is mostly bluster and speculation. It also keeps repeating that Apple has betrayed its customers in the past and that the government doesn’t understand its reluctance now. Business choices are not subject to government approval, or at least they weren’t the last time I read the U.S. Constitution.

Yes?