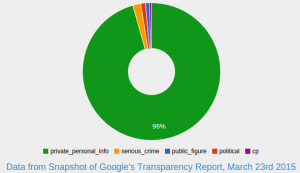

In “Check your NoSQL database – 600 terabytes of MongoDB data publicly exposed on the internet,” Graeme Burton reports that John Matherly, the inventor of Shodan, claims 595.2 terabytes (TB) of data are exposed in MongoDB instances without authentication.

For the story of how the data on MongoDB instances was collected, see “It’s the Data, Stupid!” by John Matherly.

The larger item of interest is Shodan itself: “Shodan is the world’s first search engine for Internet-connected devices.”

Considering how well everyone has done with computer security to date, being able to search Internet-connected devices should not be a problem. Yes?

From the homepage:

Explore the Internet of Things

Use Shodan to discover which of your devices are connected to the Internet, where they are located and who is using them.

See the Big Picture

Websites are just one part of the Internet. There are power plants, Smart TVs, refrigerators and much more that can be found with Shodan!

Monitor Network Security

Keep track of all the computers on your network that are directly accessible from the Internet. Shodan lets you understand your digital footprint.

Get a Competitive Advantage

Who is using your product? Where are they located? Use Shodan to perform empirical market intelligence.

My favorite is the last one, “…to perform empirical market intelligence.” You bet!

There are free accounts so I signed up for one to see what I could see. 😉

Here are some of the popular saved searches (that you can see with a free account):

- Webcam – best ip cam search I have found yet.

- Cams – admin admin

- Netcam – Netcam

- dreambox – dreambox

- default password – Finds results with “default password” in the banner; the named defaults might work!

- netgear – user: admin pass: password

- 108.223.86.43 – Trendnet IP Cam

- ssh – ssh

- Router w/ Default Info – Routers that give their default username/ password as admin/1234 in their banner.

- SCADA – SCADA systems search
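Several of these saved searches come down to matching text in the banner a service returns. As a rough illustration of that idea (a toy sketch, not how Shodan actually indexes or queries its data), the following filters a handful of made-up banners for the phrase “default password”. The function name and the sample banners are hypothetical, and the IP addresses are documentation-only addresses.

```python
def banners_mentioning(banners, phrase="default password"):
    """Return the (ip, banner) pairs whose banner text contains `phrase`.

    Case-insensitive substring match; a toy stand-in for a banner search,
    not a description of Shodan's own search logic.
    """
    needle = phrase.lower()
    return [(ip, text) for ip, text in banners if needle in text.lower()]


# Made-up sample data using documentation-only IP addresses (RFC 5737).
SAMPLE_BANNERS = [
    ("192.0.2.10", 'HTTP/1.1 401 Unauthorized\r\nWWW-Authenticate: Basic realm="Default password: 1234"'),
    ("192.0.2.11", "SSH-2.0-OpenSSH_6.6.1"),
    ("192.0.2.12", "220 FTP server ready"),
]

matches = banners_mentioning(SAMPLE_BANNERS)
```

Run against banners from your own devices, a check like this is a quick way to see whether any of them advertise their defaults to the world.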

With the free account, you can only see the first fifty (50) results for a search.

I’m not sure I agree that the pricing is “simple” but it is attractive. Note the difference between query credits and scan credits. The first applies to searches of the Shodan database and the second applies to networks you have targeted.
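To keep the two kinds of credits straight, here is a minimal sketch that reads both counters out of an account-info response. It assumes the response is JSON with `query_credits` and `scan_credits` fields, which is my understanding of what Shodan's `api-info` endpoint returns; check the API documentation before relying on those names. The sample payload is invented, not real account data.

```python
import json


def summarize_credits(payload):
    """Pull the two credit counters out of an account-info JSON string.

    Assumes `query_credits` and `scan_credits` keys (an assumption about
    the response shape); missing keys are reported as 0 rather than raising.
    """
    info = json.loads(payload)
    return {
        "query_credits": int(info.get("query_credits", 0)),
        "scan_credits": int(info.get("scan_credits", 0)),
    }


# Invented sample response for illustration only.
SAMPLE_RESPONSE = '{"plan": "dev", "query_credits": 100, "scan_credits": 100}'

credits = summarize_credits(SAMPLE_RESPONSE)
```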

The 20K+ routers w/ default info could be a real hoot!

You know, this might be a cost-effective alternative for the lower-level NSA folks.

BTW, in case you are looking for it: the API documentation.
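If you want to try the API from a script, a search is, as far as I can tell, a plain HTTPS GET with your key and query in the query string. The sketch below only builds that URL and makes no network call. The endpoint path and the `key`, `query`, and `page` parameter names reflect my reading of the REST API and should be verified against the documentation; `YOUR_API_KEY` is a placeholder and `build_search_url` is a hypothetical helper.

```python
from urllib.parse import urlencode

# Assumed REST search endpoint; verify against the API documentation.
SEARCH_ENDPOINT = "https://api.shodan.io/shodan/host/search"


def build_search_url(api_key, query, page=1):
    """Build a search URL with the key, query, and page URL-encoded."""
    params = {"key": api_key, "query": query, "page": page}
    return f"{SEARCH_ENDPOINT}?{urlencode(params)}"


# Placeholder key; substitute the one from your own account.
url = build_search_url("YOUR_API_KEY", "default password")
```

Fetching the URL with `urllib.request.urlopen` and parsing the body as JSON would be the next step. Keep in mind that searches through the API draw on your query credits.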

Definitely worth a bookmark and blog entries about your experiences with it.

It could well be that being insecure in large numbers is a form of cyberdefense.

Who is going to go after you when numerous larger, better-known targets are out there for the taking? And with IoT, the number of targets is going to increase geometrically.