The seriousness of the LogJam vulnerability was highlighted by John Leyden in Average enterprise ‘using 71 services vulnerable to LogJam’:

…

Based on analysis of 10,000 cloud applications and data from more than 17 million global cloud users, cloud visibility firm Skyhigh Networks reckons that 575 cloud services are potentially vulnerable to man-in-the-middle attacks. The average company uses 71 potentially vulnerable cloud services.

…

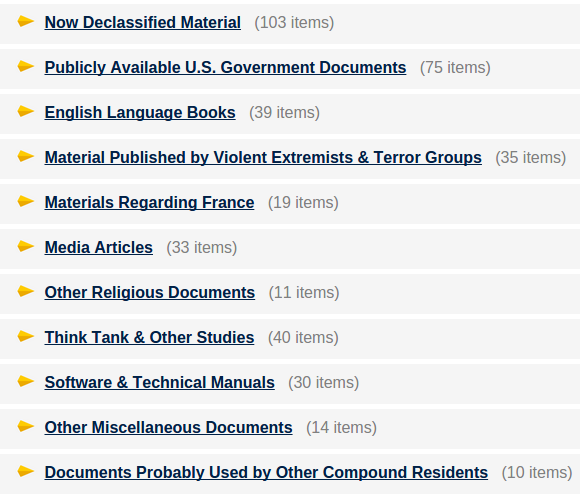

[Details from Skyhigh Networks]

The historical source of LogJam?

…

James Maude, security engineer at Avecto, said that the LogJam flaw shows how internet regulations and architecture decisions made more than 20 years ago are continuing to throw up problems.

“The LogJam issue highlights how far back the long tail of security stretches,” Maude commented. “As new technologies emerge and cryptography hardens, many simply add on new solutions without removing out-dated and vulnerable technologies. This effectively undermines the security model you are trying to build. Several recent vulnerabilities such as POODLE and FREAK have harnessed this type of weakness, tricking clients into using old, less secure forms of encryption,” he added.

Graham Cluley, in Logjam vulnerability – what you need to know, has better coverage of the history of weak encryption that led to the LogJam vulnerability.

What does that have to do with Postel’s Law?

TCP implementations should follow a general principle of robustness: be conservative in what you do, be liberal in what you accept from others. [RFC761]

As James Maude noted earlier:

As new technologies emerge and cryptography hardens, many simply add on new solutions without removing out-dated and vulnerable technologies.

That is probably not what Postel intended at the time, but it is certainly “robust” in one sense of the word: newer technologies stay compatible with older technologies that are known to be vulnerable.

In other words, robustness is responsible for the maintenance of weak encryption and hence the current danger from LogJam.
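A toy model may make the point concrete. The sketch below is not the TLS handshake; the suite labels, the `negotiate` function, and the `reject_weak` flag are all hypothetical names invented for illustration. It only shows how a server that is "liberal in what it accepts" ends up on a weak suite once someone tampers with the client's offer.

```python
# Hypothetical suite labels for illustration; these are not real IANA names.
WEAK = {"DHE_EXPORT", "RSA_EXPORT"}

def negotiate(client_offer, server_supported, reject_weak=False):
    """Return the first offered suite the server supports, or None."""
    for suite in client_offer:
        if reject_weak and suite in WEAK:
            continue  # conservative server: never agree to a known-weak suite
        if suite in server_supported:
            return suite
    return None

server = {"ECDHE_AESGCM", "DHE_AESGCM", "DHE_EXPORT"}
honest_offer = ["ECDHE_AESGCM", "DHE_AESGCM"]
tampered_offer = ["DHE_EXPORT"]  # what a man-in-the-middle substitutes

liberal_honest = negotiate(honest_offer, server)
liberal_tampered = negotiate(tampered_offer, server)
strict_tampered = negotiate(tampered_offer, server, reject_weak=True)
```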

This isn’t an entirely new idea. Eric Allman (Sendmail) warns of security issues with Postel’s Law in The Robustness Principle Reconsidered: Seeking a middle ground:

In 1981, Jon Postel formulated the Robustness Principle, also known as Postel’s Law, as a fundamental implementation guideline for the then-new TCP. The intent of the Robustness Principle was to maximize interoperability between network service implementations, particularly in the face of ambiguous or incomplete specifications. If every implementation of some service that generates some piece of protocol did so using the most conservative interpretation of the specification and every implementation that accepted that piece of protocol interpreted it using the most generous interpretation, then the chance that the two services would be able to talk with each other would be maximized. Experience with the Arpanet had shown that getting independently developed implementations to interoperate was difficult, and since the Internet was expected to be much larger than the Arpanet, the old ad-hoc methods needed to be enhanced.

Although the Robustness Principle was specifically described for implementations of TCP, it was quickly accepted as a good proposition for implementing network protocols in general. Some have applied it to the design of APIs and even programming language design. It’s simple, easy to understand, and intuitively obvious. But is it correct?

For many years the Robustness Principle was accepted dogma, failing more when it was ignored rather than when practiced. In recent years, however, that principle has been challenged. This isn’t because implementers have gotten more stupid, but rather because the world has become more hostile. Two general problem areas are impacted by the Robustness Principle: orderly interoperability and security.

…

Eric doesn’t come to a definitive conclusion with regard to Postel’s Law, but the general case is always difficult to decide.

However, the specific case, supporting encryption known to be vulnerable, shouldn’t be.
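For what declining the weak options can look like in practice, here is a minimal sketch using Python's standard `ssl` module. The function name `strict_client_context` and the particular cipher string are my assumptions, not a recommendation from any of the articles quoted here; the idea is simply to refuse old protocol versions and to leave export-grade and finite-field DHE suites off the list.

```python
import ssl

def strict_client_context() -> ssl.SSLContext:
    """Build a client context that will not negotiate legacy options."""
    ctx = ssl.create_default_context()
    # Refuse SSLv3, TLS 1.0 and TLS 1.1 outright.
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    # Allow only ECDHE key exchange with AEAD ciphers for TLS 1.2.
    # This cipher string is an illustrative choice; TLS 1.3 suites are
    # configured separately by OpenSSL and are not affected by it.
    ctx.set_ciphers("ECDHE+AESGCM:ECDHE+CHACHA20")
    return ctx

ctx = strict_client_context()
enabled = [c["name"] for c in ctx.get_ciphers()]
```

Printing `enabled` lists what is left on your own system, which is a quick way to confirm that nothing export-grade survives.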

If there were a programming principles liability checklist, one of the tick boxes should read:

___ Supports (list of encryption schemes), Date:_________

Lawyers doing discovery could then compare that list against the vulnerabilities known as of the date given to assess liability.

Programmers would be on notice that supporting encryption with known vulnerabilities is opening the door to legal liability.
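The comparison itself is mechanical. Here is a rough sketch, assuming a hand-maintained table that maps a scheme label to the vulnerability that broke it and the date it became public. The labels, the `KNOWN_WEAK` table, and the `known_vulnerable` function are hypothetical; the three entries use the public disclosure dates for POODLE, FREAK, and LogJam as I understand them, and a real checklist would need an authoritative source.

```python
from datetime import date

# Illustrative table: scheme label -> (vulnerability, public disclosure date).
KNOWN_WEAK = {
    "SSLv3": ("POODLE", date(2014, 10, 14)),
    "RSA_EXPORT": ("FREAK", date(2015, 3, 3)),
    "DHE_EXPORT": ("LogJam", date(2015, 5, 20)),
}

def known_vulnerable(supported, as_of):
    """Return (scheme, vulnerability) pairs that were already public on as_of."""
    return [
        (scheme, KNOWN_WEAK[scheme][0])
        for scheme in supported
        if scheme in KNOWN_WEAK and KNOWN_WEAK[scheme][1] <= as_of
    ]

checklist = ["TLSv1.2", "SSLv3", "DHE_EXPORT"]
in_april = known_vulnerable(checklist, date(2015, 4, 1))
in_june = known_vulnerable(checklist, date(2015, 6, 1))
```

A checklist dated April 2015 would flag only the SSLv3 entry; the same checklist dated June 2015 would flag DHE_EXPORT as well.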