Om sweet Om: (high-)functional frontend engineering with ClojureScript and React.

From the post:

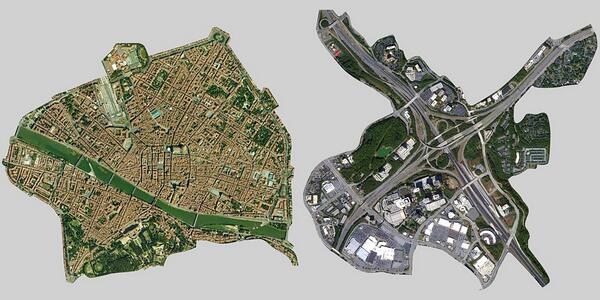

At Prismatic, we’re firm believers that great products come from a marriage of thoughtful design with rigorous engineering. Effective design requires making educated guesses about what works, building out solutions to test these hypotheses quickly, and iterating based on the results. For example, if you’ve read about our recent feed redesign, then you know that we tested three very different feed layouts in the past year before landing on a design that we and most of our users are quite happy with.

Constant experimentation and iteration presents us with an interesting technical challenge: creating a frontend architecture that allows us to build and test designs quickly, while maintaining acceptable performance for our users.

Specifically, (like most software engineering teams) our primary engineering goals are to maximize productivity and team participation by writing code that:

- is modular, with minimal coupling between independent components;

- is simple and readable; and

- has as few bugs as possible.

In our experience developing web, iOS, and backend applications, we’ve found that much (if not most) coupling, complexity, and bugs are a direct result of managing changes to application state. With ClojureScript and Om (a ClojureScript interface to React), we’ve finally found an architecture that shoulders most of this burden for us on the web. Two months ago, we rewrote our webapp in this architecture, and it’s been a huge boost to our productivity while maintaining snappy runtime performance.

…

Detailed and very interesting post on a functional approach to UI engineering.

And the promise of more posts to come Om.

One minor quibble with the engineering goal: “has as few bugs as possible.” That isn’t a goal, or at least not a realistic one. There is no known way to measure how close a codebase is to “as few bugs as possible,” and without a measure, it’s hard to call it a goal.

I first saw this in a tweet by David Nolen.