The Distributed Complexity of Large-scale Graph Processing by Hartmut Klauck, Danupon Nanongkai, Gopal Pandurangan, Peter Robinson.

Abstract:

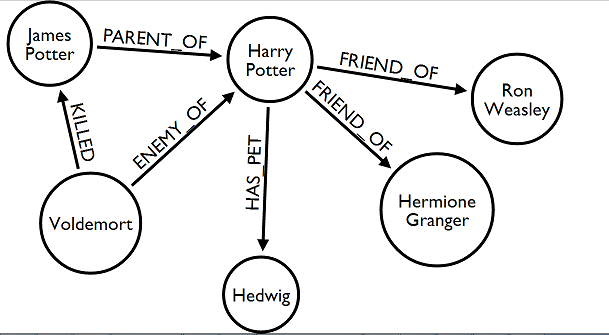

Motivated by the increasing need for fast distributed processing of large-scale graphs such as the Web graph and various social networks, we study a message-passing distributed computing model for graph processing and present lower bounds and algorithms for several graph problems. This work is inspired by recent large-scale graph processing systems (e.g., Pregel and Giraph) which are designed based on the message-passing model of distributed computing.

Our model consists of a point-to-point communication network of $$k$$ machines interconnected by bandwidth-restricted links. Communicating data between the machines is the costly operation (as opposed to local computation). The network is used to process an arbitrary $$n$$-node input graph (typically $$n \gg k > 1$$) that is randomly partitioned among the $$k$$ machines (a common implementation in many real world systems). Our goal is to study fundamental complexity bounds for solving graph problems in this model.

We present techniques for obtaining lower bounds on the distributed time complexity. Our lower bounds develop and use new bounds in random-partition communication complexity. We first show a lower bound of $$\Omega(n/k)$$ rounds for computing a spanning tree (ST) of the input graph. This result also implies the same bound for other fundamental problems such as computing a minimum spanning tree (MST). We also show an $$\Omega(n/k^2)$$ lower bound for connectivity, ST verification and other related problems.

We give algorithms for various fundamental graph problems in our model. We show that problems such as PageRank, MST, connectivity, and graph covering can be solved in $$\tilde{O}(n/k)$$ time, whereas for shortest paths, we present algorithms that run in $$\tilde{O}(n/\sqrt{k})$$ time (for $$(1+\epsilon)$$-factor approx.) and in $$\tilde{O}(n/k)$$ time (for $$O(\log n)$$-factor approx.) respectively.
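To make the model in the abstract concrete, here is a minimal Python sketch of a random partition of an $$n$$-node graph among $$k$$ machines. It assumes each node is assigned independently and uniformly at random to a machine, which is my reading of "randomly partitioned"; the function names and the ring-graph input are hypothetical and not taken from the paper. Counting the edges whose endpoints land on different machines gives a rough feel for how much of the graph can only be explored by communicating over the links.

```python
import random
from collections import Counter


def random_vertex_partition(n, k, seed=None):
    """Assign each of n nodes to one of k machines, uniformly at random.

    Assumption: independent, uniform assignment per node.
    Returns a list `home` where home[v] is the machine holding node v.
    """
    rng = random.Random(seed)
    return [rng.randrange(k) for _ in range(n)]


def machine_loads(home, k):
    """Number of nodes held by each machine, indexed by machine id."""
    counts = Counter(home)
    return [counts.get(m, 0) for m in range(k)]


def cross_machine_edges(edges, home):
    """Count edges whose two endpoints live on different machines."""
    return sum(1 for u, v in edges if home[u] != home[v])


if __name__ == "__main__":
    n, k = 1000, 10
    # Hypothetical input: a ring on n nodes.
    edges = [(i, (i + 1) % n) for i in range(n)]
    home = random_vertex_partition(n, k, seed=0)
    print("nodes per machine:", machine_loads(home, k))
    print(cross_machine_edges(edges, home), "of", len(edges), "edges cross machines")
```

With $$n \gg k$$ the loads come out close to $$n/k$$ per machine, and most edges of a sparse graph cross machine boundaries, which is why communication rather than local computation is treated as the cost.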

The authors state their main goal:

…is to investigate the distributed time complexity, i.e., the number of distributed “rounds”, for solving various fundamental graph problems. The time complexity not only captures the (potential) speed up possible for a problem, but it also implicitly captures the communication cost of the algorithm as well, since links can transmit only a limited amount of bits per round; equivalently, we can view our model where instead of links, machines can send/receive only a limited amount of bits per round (cf. Section 1.1).

How would you investigate the number of “rounds” needed to perform merging in a message-passing topic map system?

Since no single order of merging leads to a particular state, would you measure it statistically for some set of merging criteria N?
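One way to explore the statistical question is a toy Monte Carlo experiment. The sketch below is only that: it assumes topics are sets of identifiers, that two topics merge when they share an identifier, and that in one "round" each topic can take part in at most one pairwise merge. None of these rules come from the paper, and all function names are hypothetical. It runs many random merge orders and reports the mean and maximum number of rounds until nothing more can merge.

```python
import random
import statistics


def merge_round(topics, rng):
    """Run one round in which each topic joins at most one pairwise merge.

    Topics are visited in random order; each unused topic merges with the
    first unused topic (in that order) that shares an identifier with it.
    Returns the new topic list and whether any merge happened.
    """
    order = list(range(len(topics)))
    rng.shuffle(order)
    used = set()
    merged = []
    changed = False
    for i in order:
        if i in used:
            continue
        used.add(i)
        partner = next(
            (j for j in order if j not in used and topics[i] & topics[j]),
            None,
        )
        if partner is None:
            merged.append(topics[i])
        else:
            used.add(partner)
            merged.append(topics[i] | topics[partner])
            changed = True
    return merged, changed


def rounds_to_fixpoint(topics, rng):
    """Count rounds with at least one merge until no two topics overlap."""
    rounds = 0
    while True:
        topics, changed = merge_round(topics, rng)
        if not changed:
            return rounds
        rounds += 1


def estimate_rounds(topics, trials=200, seed=0):
    """Sample random merge orders; return (mean rounds, max rounds)."""
    rng = random.Random(seed)
    samples = [
        rounds_to_fixpoint([frozenset(t) for t in topics], rng)
        for _ in range(trials)
    ]
    return statistics.mean(samples), max(samples)


if __name__ == "__main__":
    # Hypothetical topics forming a chain of shared identifiers.
    chain = [{"a"}, {"a", "b"}, {"b", "c"}, {"c"}]
    print(estimate_rounds(chain))
```

The final merged state is the same whatever the order, but the number of rounds is not, so a distribution over orders seems like the natural thing to report.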

I first saw this in a tweet by Stefano Bertolo.