Minerva: a fast and flexible system for deep learning

From the post:

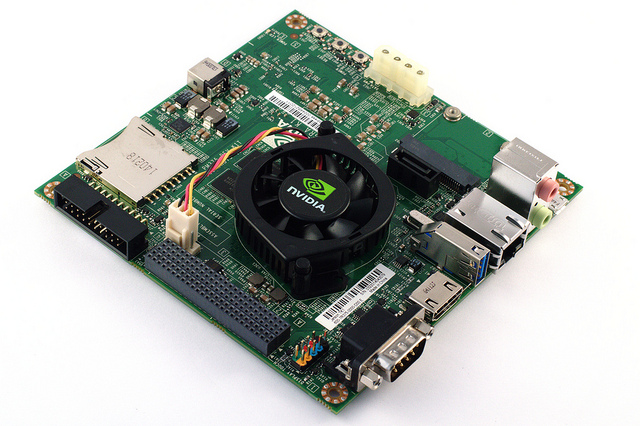

Minerva is a fast and flexible tool for deep learning. It provides NDarray programming interface, just like Numpy. Python bindings and C++ bindings are both available. The resulting code can be run on CPU or GPU. Multi-GPU support is very easy. Please refer to the examples to see how multi-GPU setting is used.Minerva is a fast and flexible tool for deep learning. It provides NDarray programming interface, just like Numpy. Python bindings and C++ bindings are both available. The resulting code can be run on CPU or GPU. Multi-GPU support is very easy. Please refer to the examples to see how multi-GPU setting is used.

Features

- Matrix programming interface

- Easy interaction with NumPy

- Multi-GPU, multi-CPU support

- Good performance: ImageNet AlexNet training achieves 213 and 403 images/s with one and two Titan GPU, respectivly. Four GPU cards number will be coming soon.

I first saw this in a blog post by Danny Bickson, Minerva: open source deep learning on GPU software from MS.

Deep learning is gaining traction fast. Fast enough that when government contractors convince the FBI wire tapping is no long a matter of plugging into the local junction box, they may start working on deep learning.

Before deep learning gets to that point, defensive measures against deep learning need to be developed. Given the variety of deep learning approaches and algorithms that is going to be a real challenge.

Perhaps immutable data structures where copying enables real time performance in presenting results that are expected? While maintaining a copy of the unexpected results?

I think there is a presumption that on querying, information systems repeat the information they have stored. That’s a fairly naive view of data storage. We know it is a matter of permissions to “see” data. Why shouldn’t the answer you see also depend upon permissions?

Defenses against deep learning and reactive data storage may become very relevant in the not too distant future. Give it some thought.