The Limitations of Deep Learning in Adversarial Settings by Nicolas Papernot, et al.

Abstract:

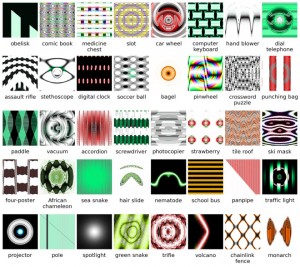

Deep learning takes advantage of large datasets and computationally efficient training algorithms to outperform other approaches at various machine learning tasks. However, imperfections in the training phase of deep neural networks make them vulnerable to adversarial samples: inputs crafted by adversaries with the intent of causing deep neural networks to misclassify. In this work, we formalize the space of adversaries against deep neural networks (DNNs) and introduce a novel class of algorithms to craft adversarial samples based on a precise understanding of the mapping between inputs and outputs of DNNs. In an application to computer vision, we show that our algorithms can reliably produce samples correctly classified by human subjects but misclassified in specific targets by a DNN with a 97% adversarial success rate while only modifying on average 4.02% of the input features per sample. We then evaluate the vulnerability of different sample classes to adversarial perturbations by defining a hardness measure. Finally, we describe preliminary work outlining defenses against adversarial samples by defining a predictive measure of distance between a benign input and a target classification.

I recommended deep learning for parsing lesser known languages earlier today. The utility of deep learning isn’t in doubt, but its vulnerability to “adversarial” input should give us pause.

Adversarial input isn’t likely to be labeled as such. In fact, it may be concealed in ordinary open data that is freely available for download.

As the authors note, the more prevalent deep learning becomes, the greater the incentive for the manipulation of input into a deep neural network (DNN).

Although phrased as “adversaries,” the manipulation of input into DNNs isn’t limited to the implied “bad actors.” The choice or “cleaning” of input could be considered manipulation of input, from a certain point of view.

This paper is notice that input into a DNN is as important in evaluating its results as as any other factor, if not more so.

Or to put it more bluntly, no disclosure of DNN data = no trust of DNN results.