Australia’s call for a boycott of U.S. travel until gun reform is passed may be the high point of the international hysteria over gun violence in the United States. Or it may not be. Hard to say at this point.

Social media has been flooded with hand-wringing over the loss of “innocent” lives, etc. You know the drill.

The victims in Oregon were no doubt “innocent,” but innocence alone isn’t the criterion by which “mass murder” is judged.

At least not according to the United States government, other Western governments and their affiliated news organizations.

Take the Los Angeles Times for example, which has an updated list of mass shootings, 1984 – 2015.

Or the breathless prose of The Chicagoist in Chicago Dominates The U.S. In Mass Shootings Count.

Based on data compiled by the crowd-sourced Mass Shooting Tracker site, the Guardian discovered that there were 994 mass shootings—defined as an incident in which four or more people are shot—in 1,004 days since Jan. 1, 2013. The Oregon shooting happened on the 274th day of 2015 and was the 294th mass shooting of the year in the U.S.

Some 294 mass shootings since January 1, 2015 in the U.S.?

Chump change, my friend, chump change.

No disrespect to the innocent dead, wounded or their grieving families, but as I said, innocence isn’t the criterion for judging mass violence. Not by Western governments, not by the Western press.

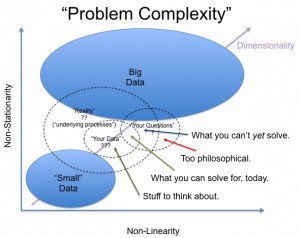

You will have to do a little data mining to come to that conclusion but if you have the time, follow along.

First, of course, we have to find acts of violence that came with no warning to their innocent victims, who were just going about their lives. At least until pain and death came raining out of the sky.

Let’s start with Operation Inherent Resolve: Targeted Operations Against ISIL Terrorists.

If you select a country name (your options are Syria and Iraq), a pop-up will display the latest news briefing on “Airstrikes in Iraq and Syria.” Under the current summary, you will see “View Information on Previous Airstrikes.”

Selecting “View Information on Previous Airstrikes” will give you a very long drop-down page with previous airstrike reports. It doesn’t list human casualties or the number of bombs dropped, but it does recite the number of airstrikes.

Capture that information down to January 1, 2015 and save it to a text file. I have already captured it and you can download us-airstrikes-iraq-syria.txt.

You will notice that the file has text other than the air strikes, but air strikes are reported in a common format:

- Near Al Hasakah, three strikes struck three separate ISIL tactical units
  and destroyed three ISIL structures, two ISIL fighting positions, and an
  ISIL motorcycle.
- Near Ar Raqqah, one strike struck an ISIL tactical unit.
- Near Mar’a, one strike destroyed an ISIL excavator.
- Near Washiyah, one strike damaged an ISIL excavator.

Your first task is to extract just the lines that start with: “- Near” and save them to a file.

I used: grep '\- Near' us-airstrikes-iraq-syria.txt > us-airstrikes-iraq-syria-strikes.txt
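A slightly stricter variant of that extraction, as a sketch only: anchoring the pattern with `^` keeps it from matching “- Near” in the middle of some other line. The function name `extract_strike_lines` and the sample lines are made up for illustration, and the optional leading whitespace is an assumption about how the file is laid out.

```shell
#!/bin/sh
# extract_strike_lines is a hypothetical helper, not from the original post.
extract_strike_lines() {
    # ^ anchors the match at the start of the line;
    # [[:space:]]* tolerates indentation (an assumption about the file).
    grep '^[[:space:]]*- Near' "$1"
}

# Made-up sample lines, only to exercise the function.
sample=$(mktemp)
cat > "$sample" <<'EOF'
Airstrikes in Syria
- Near Town A, one airstrike struck a tactical unit.
Officials said a convoy was seen - Near Town B - earlier.
  - Near Town C, two airstrikes destroyed a vehicle.
EOF

# Prints only the Town A and Town C report lines.
extract_strike_lines "$sample"
rm -f "$sample"
```

On the real file you would redirect the output the same way as before, for example `extract_strike_lines us-airstrikes-iraq-syria.txt > us-airstrikes-iraq-syria-strikes.txt`.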

Since I now have all the lines with airstrike count data, how do I add up all the numbers?

I am sure there is an XQuery solution, but it’s throwaway data, so I took the easy way out:

grep 'one airstrike' us-airstrikes-iraq-syria-strikes.txt | wc -l

Which gave me a count of all the lines with “one airstrike,” or 629 if you are interested.

Just work your way up through “ten airstrikes” and after that, nothing but zeroes. Multiply the number of lines by the number in the search expression and you have the number of airstrikes for that number. One I found was 132 for “four airstrikes,” so that was 132 × 4 = 528 airstrikes for that number.
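The count-and-multiply routine above can be rolled into one loop. This is a minimal sketch, not what I actually ran: `count_word_strikes` is a hypothetical helper name, the sample lines are made up to exercise it, and it assumes every report spells its count as “<word> airstrike” or “<word> airstrikes”.

```shell
#!/bin/sh
# count_word_strikes is a hypothetical helper, not from the original post.
# It sums airstrikes reported with number words, "one" through "ten".
count_word_strikes() {
    file=$1
    total=0
    n=0
    for word in one two three four five six seven eight nine ten; do
        n=$((n + 1))
        # grep -c counts matching lines, the same figure as `grep ... | wc -l`.
        # "four airstrike" also matches "four airstrikes".
        lines=$(grep -c "$word airstrike" "$file")
        total=$((total + n * lines))
    done
    echo "$total"
}

# Made-up sample lines, only to exercise the function.
sample=$(mktemp)
cat > "$sample" <<'EOF'
- Near Town A, one airstrike struck a tactical unit.
- Near Town B, four airstrikes struck two tactical units.
- Near Town C, four airstrikes destroyed a vehicle.
EOF

# 1 line x 1 + 2 lines x 4 = 9
count_word_strikes "$sample"
rm -f "$sample"
```

Like the manual approach, this counts lines, so a line that mentions the same phrase twice is still counted once.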

Oh, I forgot to mention, some of the reports don’t use names for numbers but digits. Yeah, inconsistent data.

The dirty answer to that was:

grep '[0-9] airstrikes' us-airstrikes-iraq-syria-strikes.txt > us-airstrikes-iraq-syria-strikes-digits.txt

The “[0-9]” detects any single digit, zero through nine. Could have made it a two-digit number, but any two-digit number ends with one digit, so the pattern still finds the line. Why bother?

Anyway, that found another 305 airstrikes that were reported in digits.
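One way to total the digit-style reports without adding them up by hand, as a sketch under stated assumptions: `sum_digit_strikes` is a hypothetical helper name, the sample lines are made up, and `grep -o` is a GNU/BSD extension rather than POSIX. Unlike the one-digit pattern above, this one captures the whole number so that it can be summed.

```shell
#!/bin/sh
# sum_digit_strikes is a hypothetical helper, not from the original post.
sum_digit_strikes() {
    # -o prints only the matched text, one match per output line.
    # [0-9][0-9]* grabs the full number, so "12 airstrikes" counts as 12.
    # awk adds up the first field of each match; "+ 0" prints 0 if none matched.
    grep -o '[0-9][0-9]* airstrike' "$1" | awk '{ sum += $1 } END { print sum + 0 }'
}

# Made-up sample lines, only to exercise the function.
sample=$(mktemp)
cat > "$sample" <<'EOF'
- Near Town A, 3 airstrikes struck a tactical unit.
- Near Town B, 12 airstrikes destroyed several vehicles.
- Near Town C, one airstrike struck a fighting position.
EOF

# 3 + 12 = 15; the number-word line is ignored.
sum_digit_strikes "$sample"
rm -f "$sample"
```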

Ah, total number of airstrikes, not bombs but airstrikes since January 1, 2015?

4,207 airstrikes as of today.

That’s four thousand, two hundred and seven times that innocent civilians may have been murdered or at least terrorized by violence falling out of the sky. And that figure is a floor on the number of bombs, since an airstrike can drop more than one.

Those 4,207 events were not the work of marginally functional, disturbed or troubled individuals. No, those events were orchestrated by highly trained, competent personnel, backed by the largest military machine on the planet and a correspondingly large military industrial complex.

I puzzle over the international hysteria over American gun violence when the acts are random, unpredictable and departures from the norm. Think of all the people with access to guns in the United States who didn’t go on violent rampages.

The other puzzlement is that the crude data mining I demonstrated above establishes that violence against innocents is a long-standing and respected international practice.

Why stress over 294 mass shootings in the U.S. when 4,207 airstrikes in 2015 have killed or endangered equally innocent civilians who are non-U.S. citizens?

What is fair for citizens of one country should be fair for citizens of every country. The international community seems to be rather selective when applying that principle.