Data Insights from the NFL’s ‘Play by Play’ Dataset by Jesse Anderson.

From the post:

In a recent GigaOM article, I shared insights from my analysis of the NFL’s Play by Play Dataset, which is a great metaphor for how enterprises can use big data to gain valuable insights into their own businesses. In this follow-up post, I will explain the methodology I used and offer advice for how to get started using Hadoop with your own data.

To see how my NFL data analysis was done, you can view and clone all of the source code for this project on my GitHub account. I am using Hadoop and its ecosystem for this processing. All of the data for this project uses the NFL 2002 season to the 4th week of the 2013 season.

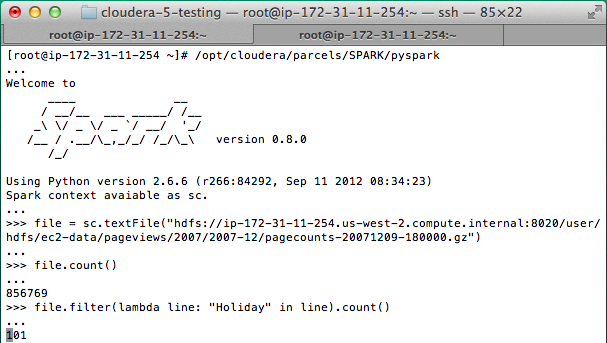

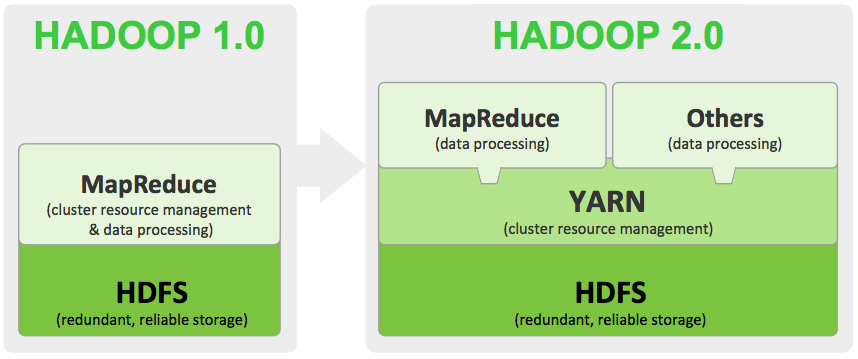

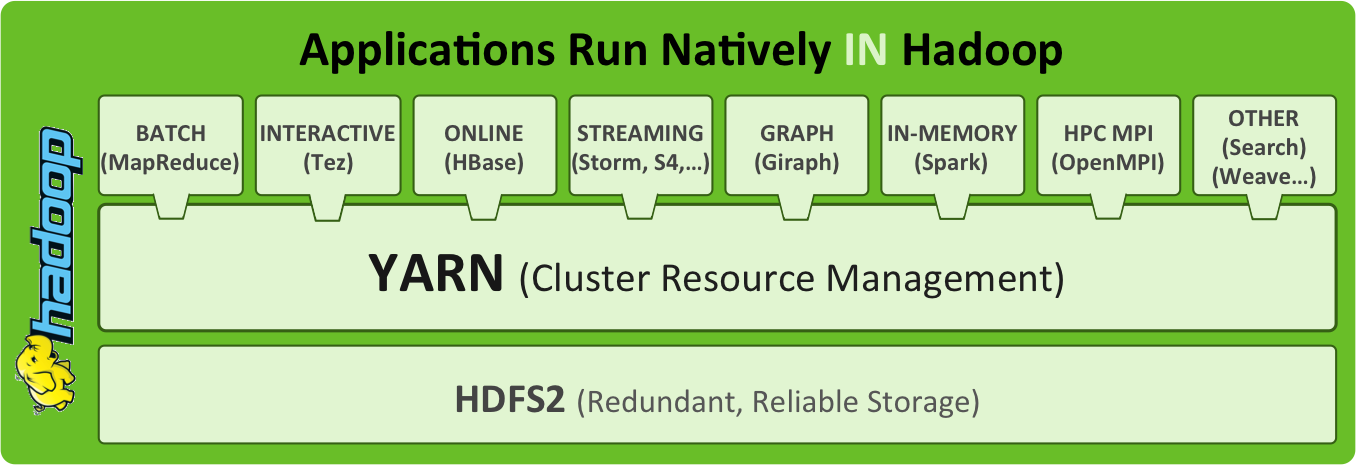

Two MapReduce programs do the initial processing. These programs process the Play by Play data and parse out the play description. Each play has unstructured or handwritten data that describes what happened in the play. Using Regular Expressions, I figured out what type of play it was and what happened during the play. Was there a fumble, was it a run or was it a missed field goal? Those scenarios are all accounted for in the MapReduce program.
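As a rough illustration of the regular-expression step described above, here is a minimal Python sketch. It is not the author's MapReduce code: the patterns, the sample descriptions, and the `classify_play` helper are all assumptions made up for this example, and the real dataset's wording may differ.

```python
import re

# Hypothetical patterns, checked in order; the first match decides the play type.
PLAY_PATTERNS = [
    ("field_goal", re.compile(r"\bfield goal\b", re.IGNORECASE)),
    ("pass", re.compile(r"\b(pass|sacked)\b", re.IGNORECASE)),
    ("run", re.compile(r"\b(left|right) (end|tackle|guard)\b|\bup the middle\b",
                       re.IGNORECASE)),
]

# Hypothetical patterns for events that can accompany a play.
FUMBLE = re.compile(r"\bfumbles\b", re.IGNORECASE)
MISSED = re.compile(r"\bis (no good|blocked)\b", re.IGNORECASE)


def classify_play(description):
    """Return the play type and a few event flags for one play description."""
    play_type = "unknown"
    for name, pattern in PLAY_PATTERNS:
        if pattern.search(description):
            play_type = name
            break
    return {
        "type": play_type,
        "fumble": bool(FUMBLE.search(description)),
        "missed_field_goal": play_type == "field_goal"
        and bool(MISSED.search(description)),
    }


if __name__ == "__main__":
    # Made-up sample descriptions, for illustration only.
    samples = [
        "(3:12) J.Smith right tackle to DEN 35 for 4 yards. FUMBLES, recovered by DEN.",
        "(0:04) K.Jones 47 yard field goal is No Good, wide left.",
    ]
    for text in samples:
        print(classify_play(text))
```

In a MapReduce job, a function like this would presumably run inside the mapper, once per play record, with the resulting fields emitted for later aggregation.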

Just in case you aren’t interested in winning $1 billion at basketball or you just want to warm up for that challenge, try some NFL data on for size.

It could be useful in teaching you the limits of analysis. For all the stats that can be collected and crunched, games don’t always turn out as predicted.

On any given Monday morning you may win or lose a few dollars in the office betting pool, but number crunching is used for more important decisions as well.