Putting Linked Data on the Map by Richard Wallis.

In fairness to Linked Data/Semantic Web, I really should mention this post by one of its more mainstream advocates:

Show me an example of the effective publishing of Linked Data – That, or a variation of it, must be the request I receive more than most when talking to those considering making their own resources available as Linked Data, either in their enterprise, or on the wider web.

There are some obvious candidates. The BBC for instance, makes significant use of Linked Data within its enterprise. They built their fantastic Olympics 2012 online coverage on an infrastructure with Linked Data at its core. Unfortunately, apart from a few exceptions such as Wildlife and Programmes, we only see the results in a powerful web presence. The published data is only visible within their enterprise.

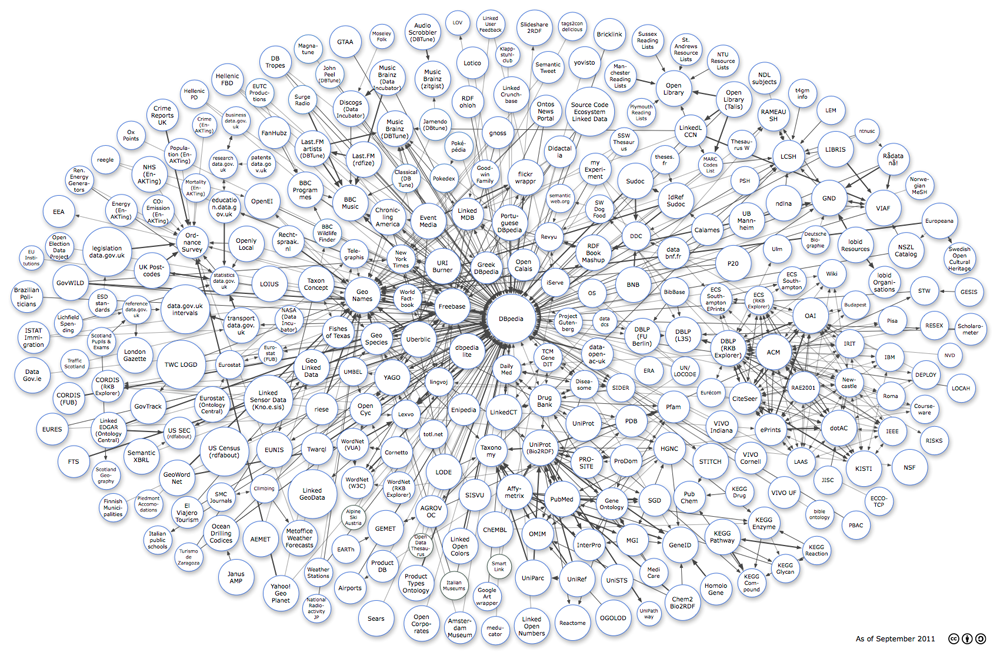

Dbpedia is another excellent candidate. From about 2007 it has been a clear demonstration of Tim Berners-Lee’s principles of using URIs as identifiers and providing information, including links to other things, in RDF – it is just there at the end of the dbpedia URIs. But for some reason developers don’t seem to see it as a compelling example. Maybe it is influenced by the Wikipedia effect – interesting but built by open data geeks, so not to be taken seriously.

A third example, which I want to focus on here, is Ordnance Survey. Not generally known much beyond the geographical patch they cover, Ordnance Survey is the official mapping agency for Great Britain. Formally a government agency, they are best known for their incredibly detailed and accurate maps that are the standard accessory for anyone doing anything in the British countryside. A little less known is that they also publish information about post-code areas, parish/town/city/county boundaries, parliamentary constituency areas, and even European regions in Britain. As you can imagine, these all don’t neatly intersect, which makes the data about them a great case for a graph based data model and hence for publishing as Linked Data. Which is what they did a couple of years ago.

The reason I want to focus on their efforts now, is that they have recently beta released a new API suite, which I will come to in a moment. But first I must emphasise something that is often missed.

Linked Data is just there – without the need for an API the raw data (described in RDF) is ‘just there to consume’. With only standard [http] web protocols, you can get the data for an entity in their dataset by just doing a http GET request on the identifier…

(images omitted)

Richard does a great job describing the Linked Data APIs from the Ordnance Survey.

My only quibble is with his point:

Linked Data is just there – without the need for an API the raw data (described in RDF) is ‘just there to consume’.

True enough but it omits the authoring side of Linked Data.

Or understanding the data to be consumed.

With HTML, authoring hyperlinks was only marginally more difficult than “using” hyperlinks.

And the consumption of a hyperlink, beyond mime types, was unconstrained.

So linked data isn’t “just there.”

It’s there with an authoring burden that remains unresolved and that constrains consumption, should you decide to follow “standard [http] web protocols” and Linked Data.

I am sure the Ordnance Survey Linked Data and other Linked Data resources Richard mentions will be very useful, to some people in some contexts.

But pretending Linked Data is easier than it is, will not lead to improved Linked Data or other semantic solutions.