Cool Interactive experiments of 2014

From the post:

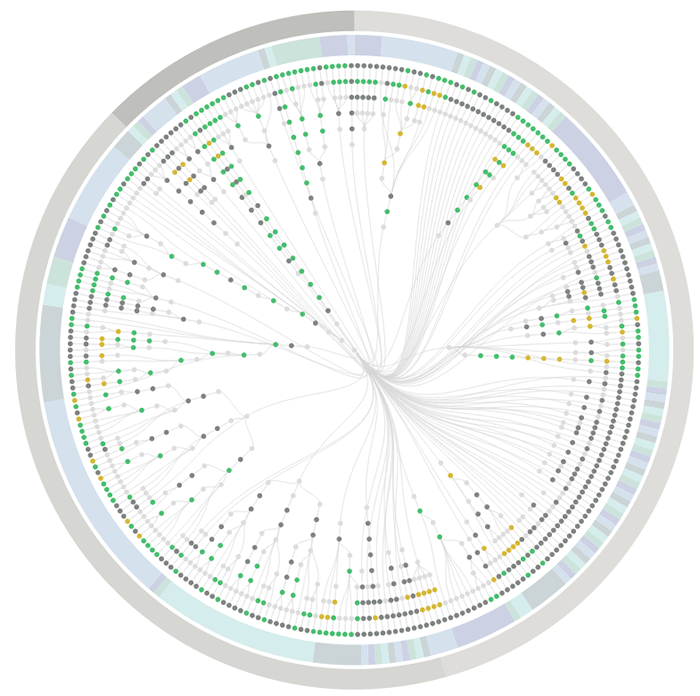

As we continue to look back at 2014, in search of the most interesting, coolest and useful pieces of content that came to our attention throughout the year, it’s only natural that we find projects that, despite being much less known and spoken of by the data visualization community than the ones of “The New York Times” or “The Washington Post”, have a certain “je ne sais quoi” to it, either it’s the project’s overall aesthetics, or the type of the data visualized.

Most of all, these projects show how wide the range of what visualization can be used for, outside the pressure of a client, a deadline or a predetermined tool to use. Self-promoting pieces, despite the low general value they might have, still play a determinant role in helping information designers test and expand their skills. Experimentation is at the core of this exciting time we are living in, so we gathered a couple of dozens of visual experiments that we had the opportunity to feature in our weekly “Interactive Inspiration” round ups, published every Friday.

Very impressive! I will just list the titles for you here:

- The Hobbit | Natalia Bilenko, Asako Miyakawa

- Periodic Table of Storytelling | James Harris

- Graph TV | Kevin Wu

- Beer Viz | Divya Anand, Sonali Sharma, Evie Phan, Shreyas

- One Human Heartbeat | Jen Lowe

- We can do better | Ri Liu

- F1 Scope | Michal Switala

- F1 Timeline | Peter Cook

- The Largest Vocabulary in Hip hop | Matt Daniels

- History of Rock in 100 Songs | Silicon Valley Data Science

- When sparks fly | Lam Thuy Vo

- The Colors of Motion | Charlie Clark

- World Food Clock | Luke Twyman

- Score to Chart | Davide Oliveri

- Culturegraphy | Kim Albrecht

- The Big Picture | Giulio Fagiolini

- Commonwealth War Dead: First World War Visualised | James Offer

- The Pianogram | Joey Cloud

- Faces per second in episodes of House of Cards TV Series | Virostatiq

- History Words Flow | Santiago Ortiz

- Global Weirding | Cicero Bengler

If they have this sort of eye candy every Friday, mark me down as a regular visitor to VisualLoop.

BTW, I could have used XSLT to scrape the titles from the HTML but since there weren’t any odd line breaks, a regex in Emacs did the same thing with far fewer keystrokes.

I sometimes wonder if “interactive visualization” focuses too much on the visualization reacting to our input? After all, we are already interacting with visual stimuli in ways I haven’t seen duplicated on the image side. In that sense, reading books is an interactive experience, just on the user side.