Tensions surround release of new Rosetta comet data by Eric Hand.

From the post:

…

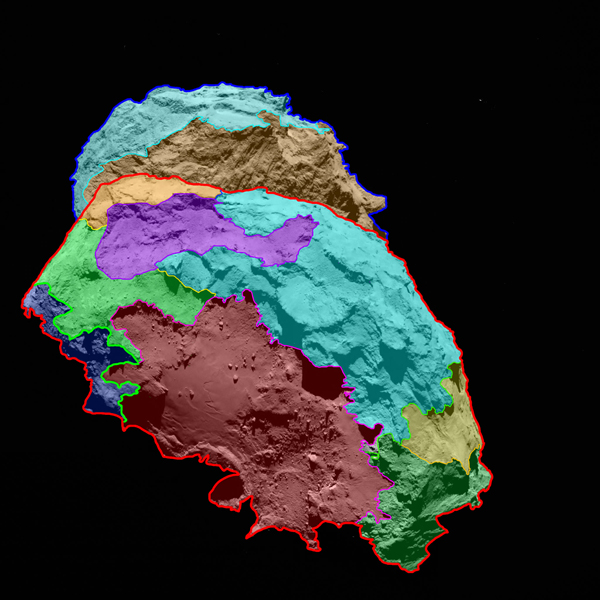

For the Rosetta mission, there is an explicit tension between satisfying the public with new discoveries and allowing scientists first crack at publishing papers based on their own hard-won data. “There is a tightrope there,” says Taylor, who’s based at ESA’s European Space Research and Technology Centre (ESTEC) in Noordwijk, the Netherlands. But some ESA officials are worried that the principal investigators for the spacecraft’s 11 instruments are not releasing enough information. In particular, the camera team, led by principal investigator Holger Sierks, has come under special criticism for what some say is a stingy release policy. “It’s a family that’s fighting, and Holger is in the middle of it, because he holds the crown jewels,” says Mark McCaughrean, an ESA senior science adviser at ESTEC.

Allowing scientists to withhold data for some period is not uncommon in planetary science. At NASA, a 6-month period is typical for principal investigator–led spacecraft, such as the MESSENGER mission to Mercury, says James Green, the director of NASA’s planetary science division in Washington, D.C. However, Green says, NASA headquarters can insist that the principal investigator release data for key media events. For larger strategic, or “flagship,” missions, NASA has tried to release data even faster. The Mars rovers, such as Curiosity, have put out images almost as immediately as they are gathered.

…

Sierks, of the Max Planck Institute for Solar System Research in Göttingen, Germany, feels that the OSIRIS team has already been providing a fair amount of data to the public—about one image every week. Each image his team puts out is better than anything that has ever been seen before in comet research, he says. Furthermore, he says other researchers, unaffiliated with the Rosetta team, have submitted papers based on these released images, while his team has been consumed with the daily task of planning the mission. After working on OSIRIS since 1997, Sierks feels that his team should get the first shot at using the data.

“Let’s give us a chance of a half a year or so,” he says. He also feels that his team has been pressured to release more data than other instruments. “Of course there is more of a focus on our instrument,” which he calls “the eyes of the mission.”

…

What if there were a solution to the Rosetta “tensions” other than 1) granting researchers six (6) months of exclusive access to data or 2) releasing data as soon as it is gathered?

I am sure everyone can gather arguments for one side or the other, but neither gathering nor repeating them is going to move the discussion forward.

What if there were an agreed-upon registry for data sets (not a repository, but a registry) where researchers could register anticipated data and, once it was acquired, record the date the data was deposited in a public repository and the list of researchers entitled to publish using that data?

The set of publications in most subject areas is rather small. If those publications agreed not to accept or review papers based upon registered data for six (6) months, or some other agreed-upon period, researchers could release data as it is acquired and still protect their opportunity to be the first to publish with it.
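To make the proposal a little more concrete, here is a minimal sketch of what a registry record and a participating publication's embargo check might look like. Everything in it is hypothetical: the `RegistryEntry` fields, the `may_review` rule, the 182-day stand-in for "six months," and the choice to let a paper through when *any* author is on the entitled list are all assumptions for illustration, not features of any existing registry.

```python
from dataclasses import dataclass, field
from datetime import date, timedelta
from typing import Optional


@dataclass
class RegistryEntry:
    """Hypothetical registry record: it describes a data set but holds no data."""
    dataset_id: str
    description: str
    # Set once the data is acquired and deposited in a public repository.
    deposited: Optional[date] = None
    # Researchers entitled to publish during the embargo (assumed field).
    entitled: set[str] = field(default_factory=set)
    # Roughly six months; the real period would be agreed upon by the community.
    embargo_days: int = 182

    def embargo_ends(self) -> Optional[date]:
        """Date the embargo lifts, or None if the data has not been deposited yet."""
        if self.deposited is None:
            return None
        return self.deposited + timedelta(days=self.embargo_days)


def may_review(entry: RegistryEntry, authors: set[str], submitted: date) -> bool:
    """Would a participating publication accept a paper based on this data set?"""
    # Assumption: a paper with at least one entitled author may be reviewed at any time.
    if authors & entry.entitled:
        return True
    end = entry.embargo_ends()
    # Only anticipated, not yet deposited: no one outside the list should be publishing.
    if end is None:
        return False
    return submitted >= end


# Usage with made-up names and dates.
entry = RegistryEntry("example-dataset-001", "hypothetical data set",
                      deposited=date(2014, 8, 6), entitled={"team_member"})
print(entry.embargo_ends())                                       # 2015-02-04
print(may_review(entry, {"outside_researcher"}, date(2014, 10, 1)))  # False
print(may_review(entry, {"outside_researcher"}, date(2015, 3, 1)))   # True
```

Note that the registry only records dates and names. The data itself goes to a public repository as soon as it is acquired, and the embargo is enforced by the publications' agreement rather than by withholding anything.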

This simple sketch leaves a host of details to explore and answer, but registering data with a publication delay could address the concerns that surround publicly funded data in general.

Thoughts?