Mapping Research With WikiMaps

From the post:

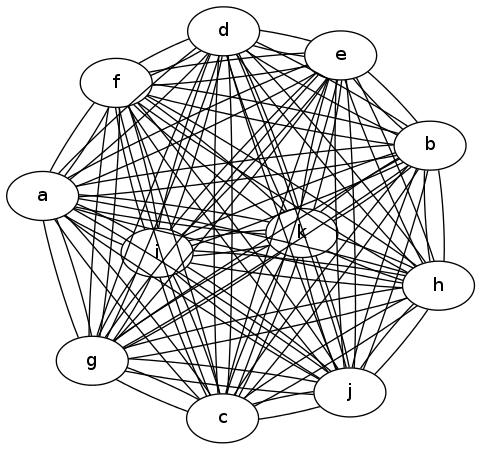

An international research team has developed a dynamic tool that allows you to see a map of what is “important” on Wikipedia and the connections between different entries. The tool, which is currently in the “alpha” phase of development, displays classical musicians, bands, people born in the 1980s, and selected celebrities, including Lady Gaga, Barack Obama, and Justin Bieber. A slider control, or play button, lets you move through time to see how a particular topic or group has evolved over the last 3 or 4 years. The desktop version allows you to select any article or topic.

Wikimaps builds on the fact that Wikipedia contains a vast amount of high-quality information, despite the very occasional spot of vandalism and the rare instances of deliberate disinformation or inadvertent misinformation. It also carries, with each article, metadata about the page’s authors and detailed information about every single contribution, edit, update and change. This, say Reto Kleeb of the MIT Center for Collective Intelligence and colleagues, “…opens new opportunities to investigate the processes that lie behind the creation of the content as well as the relations between knowledge domains.” They suggest that because Wikipedia has such a great amount of underlying information in its metadata, it is possible to create a dynamic picture of the evolution of a page, topic or collection of connections.
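The time slider implies rebuilding the link graph as it stood at each moment from the revision history. Here is a minimal sketch in Python, assuming each article's history is available as (timestamp, set of linked titles) pairs; the data format, the `links_at` function and the sample articles are hypothetical illustrations, not the Wikimaps implementation.

```python
from bisect import bisect_right
from typing import Dict, List, Set, Tuple

# Hypothetical revision record: (timestamp, titles linked from that revision).
Revision = Tuple[int, Set[str]]


def links_at(history: Dict[str, List[Revision]], t: int) -> Set[Tuple[str, str]]:
    """Return the directed links (source, target) as they stood at time t.

    The latest revision of each article with timestamp <= t determines its
    outgoing links; articles with no revision by time t contribute nothing.
    """
    edges: Set[Tuple[str, str]] = set()
    for article, revisions in history.items():
        ordered = sorted(revisions, key=lambda rev: rev[0])
        times = [ts for ts, _ in ordered]
        i = bisect_right(times, t)  # number of revisions made at or before t
        if i == 0:
            continue  # article did not exist yet at time t
        _, targets = ordered[i - 1]
        edges.update((article, target) for target in targets)
    return edges


# Toy history keyed by article title; timestamps are plain years for brevity.
history = {
    "Lady Gaga": [(2009, {"Pop music"}), (2011, {"Pop music", "Barack Obama"})],
    "Barack Obama": [(2008, {"United States"})],
}

# One snapshot per year: the frames a time slider could step through.
frames = {year: links_at(history, year) for year in range(2008, 2012)}
```

Stepping through `frames` in order gives the kind of sequence a play button could animate. A real tool would presumably also lay out the graph and highlight what changed between frames, which this sketch leaves out.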

See the demo version: http://www.ickn.org/wikimaps/.

For some very cutting-edge thinking, see: Intelligent Collaborative Knowledge Networks (MIT), which has a download link to “Condor,” a local version of the Wikimaps software.

Wikimaps builds upon a premise similar to the original premise of the WWW: links break. Deal with it. Hypertext systems prior to the WWW had tremendous overhead to make sure links remained viable. So much overhead that none of them could scale. The WWW allowed links to break and to be easily created. That scales. (The failure of the Semantic Web can be traced to the requirement that links not fail. Just the opposite of what made the WWW workable.)

Wikimaps builds upon the premise that the “facts” we have may be incomplete, incorrect, partial or even contradictory. All things that most semantic systems treat as verboten. An odd requirement, since our information is always incomplete, incorrect (possibly), partial or even contradictory. We have set requirements for our information systems that we can’t meet working by hand. Not surprising that our systems fail and fail to scale.

How much information failure can you tolerate?

A question that should be asked of every information system at the design stage. If the answer is none, move on to a project with some chance of success.

I was surprised at the journal reference, not one I would usually scan. Recent origin, expensive, not in library collections I access.

Journal reference:

Reto Kleeb et al. Wikimaps: dynamic maps of knowledge. Int. J. Organisational Design and Engineering, 2012, 2, 204-224

Abstract:

We introduce Wikimaps, a tool to create a dynamic map of knowledge from Wikipedia contents. Wikimaps visualise the evolution of links over time between articles in different subject areas. This visualisation allows users to learn about the context a subject is embedded in, and offers them the opportunity to explore related topics that might not have been obvious. Watching a Wikimap movie permits users to observe the evolution of a topic over time. We also introduce two static variants of Wikimaps that focus on particular aspects of Wikipedia: latest news and people pages. ‘Who-works-with-whom-on-Wikipedia’ (W5) links between two articles are constructed if the same editor has worked on both articles. W5 links are an excellent way to create maps of the most recent news. PeopleMaps only include links between Wikipedia pages about ‘living people’. PeopleMaps in different-language Wikipedias illustrate the difference in emphasis on politics, entertainment, arts and sports in different cultures.
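The W5 rule in the abstract is simple enough to sketch: two articles are linked when the same editor has worked on both. The Python below assumes edit records arrive as (editor, article) pairs, for instance extracted from revision histories. The function names, the shared-editor count used as a link weight, and the sample data are illustrative assumptions, not details taken from the paper. A PeopleMaps-style filter is included as a second step, assuming the set of "living people" titles is already known.

```python
from collections import Counter, defaultdict
from itertools import combinations
from typing import Dict, Iterable, Set, Tuple


def w5_links(edits: Iterable[Tuple[str, str]]) -> Counter:
    """Build 'who-works-with-whom' links from (editor, article) edit records.

    Two articles are linked if at least one editor has edited both. The
    Counter value (an illustrative addition) is the number of shared editors.
    """
    articles_by_editor: Dict[str, Set[str]] = defaultdict(set)
    for editor, article in edits:
        articles_by_editor[editor].add(article)  # repeat edits collapse here

    links: Counter = Counter()
    for articles in articles_by_editor.values():
        # sorted() gives each undirected pair one canonical (a, b) orientation
        for a, b in combinations(sorted(articles), 2):
            links[(a, b)] += 1
    return links


def people_map(links: Counter, living_people: Set[str]) -> Counter:
    """Keep only links whose endpoints are both pages about living people."""
    return Counter({(a, b): w for (a, b), w in links.items()
                    if a in living_people and b in living_people})


# Hypothetical edit log: editor names and articles are made up.
edits = [
    ("alice", "Lady Gaga"), ("alice", "Justin Bieber"),
    ("bob", "Lady Gaga"), ("bob", "Justin Bieber"), ("bob", "Pop music"),
    ("carol", "Barack Obama"),
]
links = w5_links(edits)
people = people_map(links, {"Lady Gaga", "Justin Bieber", "Barack Obama"})
```

An editor who touches n articles contributes n(n-1)/2 pairs, so a practical version would likely need to exclude bots or very prolific accounts, or restrict edits to a recent time window; the abstract does not say how Wikimaps handles this.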

Just in case you are interested: International Journal of Organisational Design and Engineering, Editor in Chief: Prof. Rodrigo Magalhaes, ISSN online: 1758-9800, ISSN print: 1758-9797.